How AI Agents Talk to Tools: A Visual Understanding of Model Context Protocol (MCP)

Every AI agent needs tools. But every integration feels custom. What if there were a shared protocol for agent-tool communication? Here's a visual introduction to how the Model Context Protocol (MCP) works.

AI agents are entering a new era.

From OpenAI’s Agents SDK to Claude Code and Manus AI, the agentic AI ecosystem is exploding.

Devs are building autonomous agents that can reason, use tools, and orchestrate tasks — across browsers, terminals, IDEs, and apps. But here’s the problem:

⚠️ Every agent-tool integration is still custom and brittle.

There’s no shared communication standard, so developers are stuck wiring together APIs, SDKs, and hardcoded endpoints by hand.

Enter: The Model Context Protocol (MCP)

MCP is a lightweight, open protocol introduced by Anthropic that standardizes how AI agents communicate with external tools.

Built on familiar primitives like HTTP and JSON-RPC 2.0, it separates:

the Agent (client) from

the Tool (server)

This lets you plug-and-play tools like you would USB devices—without rewriting business logic every time.
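Because MCP messages are JSON-RPC 2.0 objects, it helps to see one. The Python sketch below builds two requests by hand. The method names tools/list and tools/call come from the MCP specification. The helper make_request and the tool get_time_in_timezone are hypothetical. In practice an MCP SDK builds these messages for you.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object (a dict ready for json.dumps)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

# Ask the server which tools it offers.
list_tools = make_request(1, "tools/list")

# Invoke one tool by name, with arguments matching its input schema.
# "get_time_in_timezone" is a hypothetical tool used only for illustration.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "get_time_in_timezone", "arguments": {"timezone": "Europe/Paris"}},
)

print(json.dumps(list_tools))  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(json.dumps(call_tool))
```

Every request carries an id so the client can match each response to the request that produced it.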

Let’s explore how it works. 👇

🧩 What Is MCP?

The Model Context Protocol (MCP) is an open communication protocol that defines how AI agents (clients) can interact with external tools, resources, and prompts (servers) using a shared interface.

Think of it like HTTP or ODBC for AI agents.

Clients don’t need to know how the tool works—just how to speak MCP.

Servers declare what services they offer and expose them over standardized schemas.

Hosts can swap tools in and out without rewriting agent logic.


🧠 What is the Model Context Protocol (MCP) Architecture?

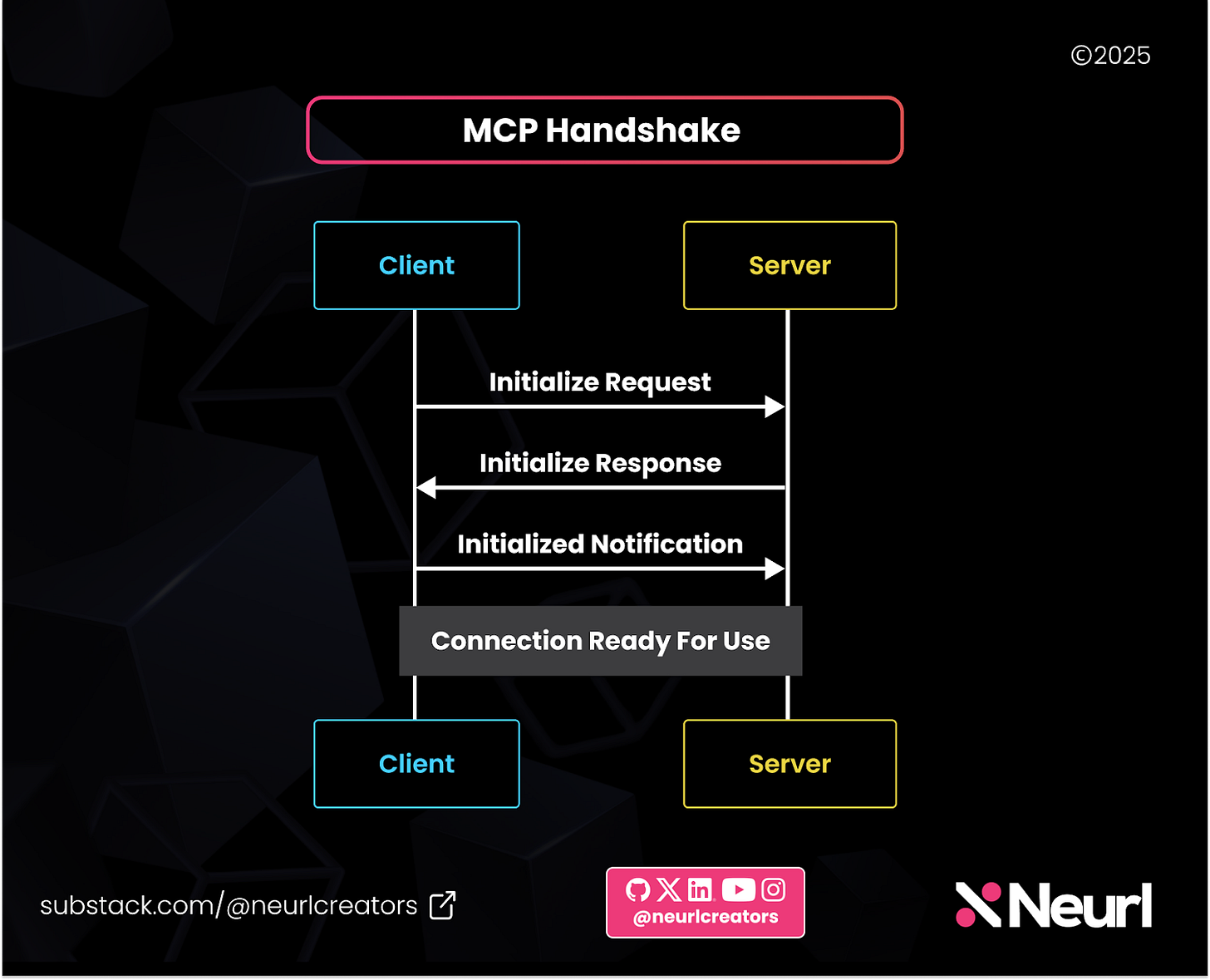

MCP enables an agent to invoke external tools in a standardized way.

It brings structure to how tools are exposed, how agents discover them, and how the model uses their outputs to generate better results.

🔁 How MCP Works: Host, Client, and Server

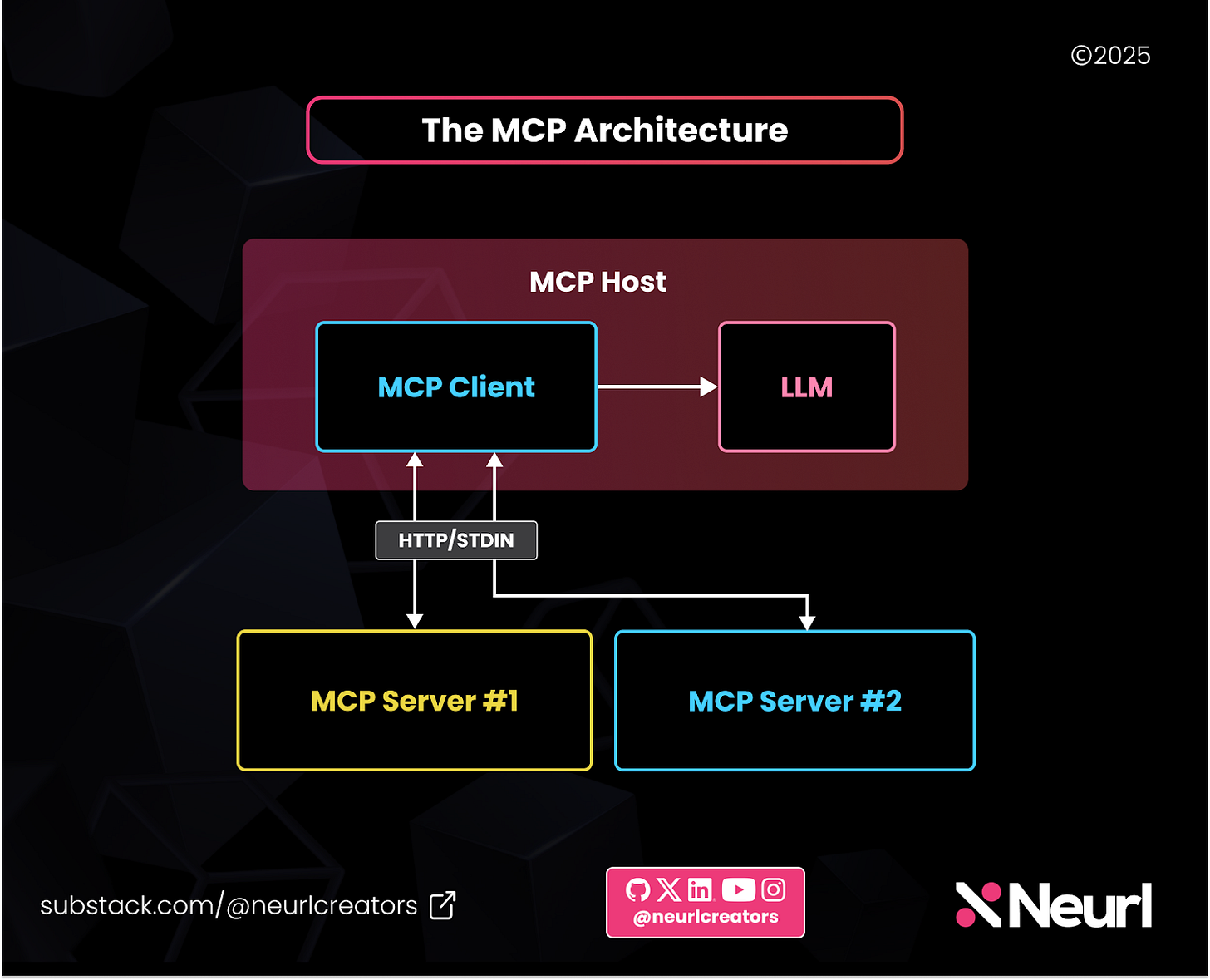

MCP follows a client-server architecture, but with a layout optimized for LLM-based applications:

The Host is the application (e.g., Cursor, Claude Desktop) containing an LLM and an MCP client.

The MCP Client is embedded inside the Host application and handles communication with MCP servers. A Host typically creates one client per server, and each client keeps a one-to-one connection with its server.

MCP Servers expose tools, resources, and prompts over MCP, extending what the agent can do.
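To make the host/client/server split concrete, here is an in-process Python sketch. FakeServer, Client, and Host are hypothetical classes, not part of any MCP SDK, and real clients talk to servers over a transport rather than calling them directly. The host keeps one client per server, mirroring the one-to-one client-server pairing in the MCP specification, and routes each tool call by tool name.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical in-process stand-in for an MCP server: a name plus its tools.
@dataclass
class FakeServer:
    name: str
    tools: Dict[str, Callable[..., str]]

    def list_tools(self):
        return sorted(self.tools)

    def call_tool(self, tool_name, arguments):
        return self.tools[tool_name](**arguments)

# One client per server connection.
@dataclass
class Client:
    server: FakeServer

    def list_tools(self):
        return self.server.list_tools()

    def call_tool(self, tool_name, arguments):
        return self.server.call_tool(tool_name, arguments)

@dataclass
class Host:
    clients: Dict[str, Client] = field(default_factory=dict)
    routes: Dict[str, str] = field(default_factory=dict)  # tool name -> server name

    def connect(self, server: FakeServer):
        """Create a dedicated client for this server and index its tools."""
        client = Client(server)
        self.clients[server.name] = client
        for tool_name in client.list_tools():
            self.routes[tool_name] = server.name

    def call(self, tool_name, arguments):
        """Route a tool call to the client whose server offers that tool."""
        client = self.clients[self.routes[tool_name]]
        return client.call_tool(tool_name, arguments)

host = Host()
host.connect(FakeServer("git", {"list_issues": lambda repo: f"issues for {repo}"}))
host.connect(FakeServer("tests", {"run_tests": lambda path: f"ran tests in {path}"}))
print(host.call("run_tests", {"path": "src/"}))  # ran tests in src/
```

If two servers expose a tool with the same name, this sketch simply lets the later one win; a real host needs an explicit policy for such collisions.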

🧠 Key Insight:

The client doesn’t need to know how each tool works. It just needs to speak MCP. That’s the magic of decoupling.

💻 Real Example: Cursor as an MCP Host

Take Cursor, an IDE powered by multiple LLMs. Cursor:

Embeds LLMs like Claude or GPT

Acts as the host

Runs an embedded MCP client

Connects to external MCP Servers that provide capabilities like “Summarize code,” “Run tests,” or “Query GitHub”

For example, one server might be a local script that exposes a code-refactoring tool, while another fetches GitHub issues. Both plug into Cursor without any custom integration logic.

🧠 Key Concept:

Because tools are discovered dynamically from MCP servers rather than hardcoded, you can approve or block individual tools inside the Cursor UI.
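How Cursor implements this internally isn't covered here. As a generic sketch, a host can filter the tools a server advertises through a user policy before showing them to the model. The names policy and visible_tools are hypothetical, and the choice to block unlisted tools by default is an assumption.

```python
# Hypothetical per-user policy: tool name -> approved?
policy = {"run_tests": True, "delete_branch": False}

def visible_tools(advertised, policy, default=False):
    """Return the tools the model may see: advertised by a server AND approved.

    Tools with no policy entry fall back to `default` (blocked unless approved).
    """
    return [name for name in advertised if policy.get(name, default)]

advertised = ["run_tests", "delete_branch", "query_github"]
print(visible_tools(advertised, policy))  # ['run_tests']
```

Because the list comes from the server at runtime, a newly added tool stays hidden until the user approves it.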

📡 MCP Transports: How Clients and Servers Talk

MCP supports two types of transport mechanisms that determine how the host communicates with servers:

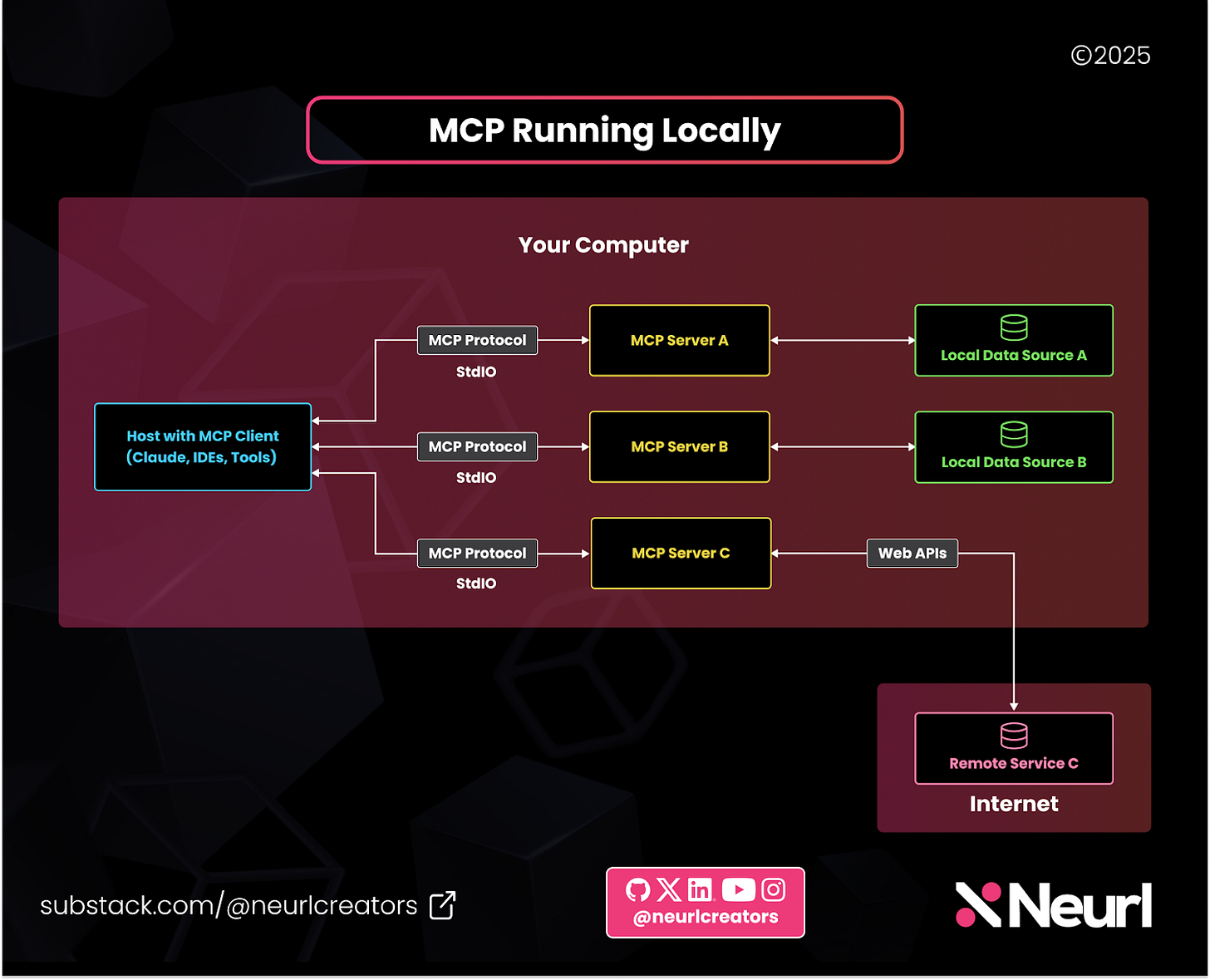

🔌 1. Local Communication (STDIN/STDOUT)

When the MCP host and server run on the same machine, they communicate via standard input/output (stdin/stdout).

This enables:

Running tools as terminal scripts

Super fast, low-latency communication

Easy debugging for developers

Example setup:

Host: Claude Desktop or Cursor

Server: A local Python script that returns the current time in a given timezone

The host launches the script as a subprocess and streams requests to it. This allows you to run small tools as local scripts—zero deployment needed.
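Here is a minimal, dependency-free Python sketch of the stdio transport. The host side starts a server script with subprocess.Popen and exchanges newline-delimited JSON-RPC messages over the script's stdin and stdout. It is deliberately simplified: it skips MCP's initialize handshake, and it swaps the time-zone tool for a hypothetical echo tool so it runs without time-zone data. In practice you would use an official MCP SDK rather than hand-rolling the framing.

```python
import json
import subprocess
import sys

# Hypothetical server script: one JSON-RPC request per line on stdin,
# one JSON-RPC response per line on stdout. Not a complete MCP server.
SERVER_SOURCE = r'''
import json, sys

TOOLS = [{"name": "echo", "description": "Return the given text",
          "inputSchema": {"type": "object",
                          "properties": {"text": {"type": "string"}},
                          "required": ["text"]}}]

while True:
    line = sys.stdin.readline()
    if not line:  # host closed stdin: shut down
        break
    request = json.loads(line)
    if request["method"] == "tools/list":
        reply = {"result": {"tools": TOOLS}}
    elif request["method"] == "tools/call":
        text = request["params"]["arguments"]["text"]
        reply = {"result": {"content": [{"type": "text", "text": text}]}}
    else:
        reply = {"error": {"code": -32601, "message": "Method not found"}}
    reply.update({"jsonrpc": "2.0", "id": request["id"]})
    print(json.dumps(reply), flush=True)  # flush: stdout is a pipe
'''

def start_server():
    """Launch the server as a subprocess, as a host does for a stdio server."""
    return subprocess.Popen(
        [sys.executable, "-c", SERVER_SOURCE],
        stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True,
    )

def send(proc, request):
    """Write one newline-delimited request and read one response line back."""
    proc.stdin.write(json.dumps(request) + "\n")
    proc.stdin.flush()
    return json.loads(proc.stdout.readline())

proc = start_server()
tools = send(proc, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
reply = send(proc, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                    "params": {"name": "echo", "arguments": {"text": "hi"}}})
print(reply["result"]["content"][0]["text"])  # hi
proc.stdin.close()
proc.wait()
```

Newline framing is why stdio messages must not contain embedded newlines; json.dumps emits a single line by default.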

🌐 2. Remote Communication (HTTP + SSE)

For remote tools, MCP uses:

HTTP POST: for client-to-server messages, such as tool invocation requests

Server-Sent Events (SSE): for server-to-client messages, streamed back over a long-lived connection

This enables:

Real-time streaming

Two-way messaging: the server can push messages to the client at any time, rather than only answering individual requests as in classic REST APIs

Long-running or complex operations

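Only requirement: the client must initiate the connection. Note that newer revisions of the MCP specification replace this HTTP + SSE transport with "Streamable HTTP", in which the client still sends messages via HTTP POST and the server can optionally stream its responses over SSE.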

🧪 Example: Cursor sends a tool invocation request to a remote MCP server (written, say, in Node.js), which streams the results back over SSE.
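The SSE wire format is plain text: each event is a block of "field: value" lines ending with a blank line. In the original HTTP + SSE transport, the server's first event (named endpoint) tells the client which URL to POST its messages to, and later message events carry JSON-RPC responses. The simplified Python parser below ignores the id and retry fields, and the endpoint path in the sample stream is hypothetical.

```python
import json

def parse_sse(stream_text):
    """Split an SSE text stream into (event_type, data) pairs.

    Events end at a blank line; multiple data lines are joined with "\n".
    Simplified: ignores id:/retry: fields and skips ':' comment lines.
    """
    events = []
    event_type, data_lines = "message", []
    for line in stream_text.splitlines():
        if line == "":
            if data_lines:  # dispatch the completed event
                events.append((event_type, "\n".join(data_lines)))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment / keep-alive
        else:
            field, _, value = line.partition(":")
            value = value[1:] if value.startswith(" ") else value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events

sample = (
    "event: endpoint\n"
    "data: /messages?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 2, "result": {"content": []}}\n'
    "\n"
)

for event_type, data in parse_sse(sample):
    if event_type == "message":
        print(json.loads(data)["id"])  # 2
```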

🧰 What Services Can an MCP Server Provide?

Each MCP server declares a list of services that a client can access. There are three types:

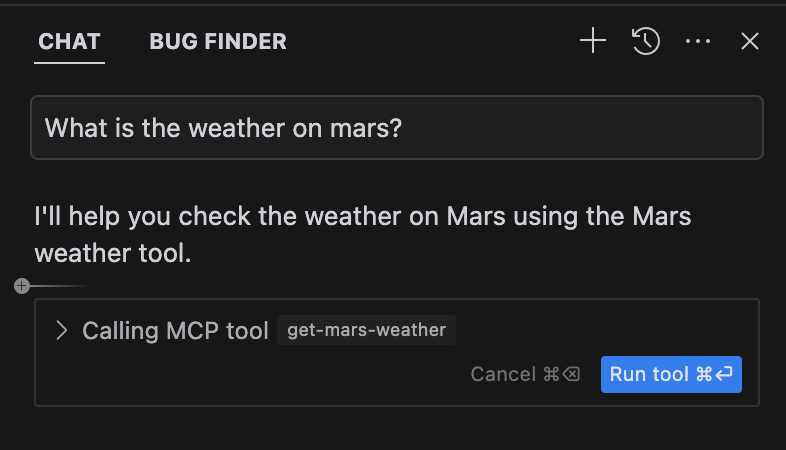

🔧 1. Tools

Tools are callable actions, like cloud functions—but externalized and standardized.

Example: getWeather(location) → Defined on the MCP server → The client invokes it → The result is passed into the LLM’s context.

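A tool is declared with a name, a short description, and a JSON Schema (inputSchema) describing its arguments. For example, the time-zone tool from the local setup above could be declared as:

```json
{
  "name": "get_time_in_timezone",
  "description": "Return the current time in the given IANA time zone",
  "inputSchema": {
    "type": "object",
    "properties": {
      "timezone": { "type": "string" }
    },
    "required": ["timezone"]
  }
}
```

These tools are reusable, swappable, and not tied to any one model provider.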
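Because MCP tool definitions carry a JSON Schema for their inputs, a client or server can check arguments before running a tool. The Python sketch below is a deliberately minimal check of required fields and a few top-level types; check_arguments and TYPE_MAP are hypothetical names, not full JSON Schema validation. For real validation, a complete library such as the third-party jsonschema package is the usual choice.

```python
# Simplified mapping from a few JSON Schema type names to Python types.
# "number"/"integer" are left out because Python's bool is a subclass of int.
TYPE_MAP = {"string": str, "boolean": bool, "object": dict, "array": list}

# Input schema for the time-zone tool.
schema = {
    "type": "object",
    "properties": {"timezone": {"type": "string"}},
    "required": ["timezone"],
}

def check_arguments(schema, arguments):
    """Return a list of problems; an empty list means the arguments pass.

    Checks only 'required' fields and the types of top-level properties.
    """
    problems = [f"missing required field: {name}"
                for name in schema.get("required", []) if name not in arguments]
    for name, value in arguments.items():
        expected = schema.get("properties", {}).get(name, {}).get("type")
        if expected in TYPE_MAP and not isinstance(value, TYPE_MAP[expected]):
            problems.append(f"{name} should be of type {expected}")
    return problems

print(check_arguments(schema, {"timezone": "Asia/Tokyo"}))  # []
print(check_arguments(schema, {"timezone": 9}))  # ['timezone should be of type string']
```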

📂 2. Resources

Resources are external data objects—text, JSON, files, images—that the model can access.

📝 Plain text files (e.g., code snippets, articles)

🧠 JSON / XML / CSV blobs

📄 PDFs

🌇 Images

🎧 Audio or video metadata

Example: To answer questions about a user's uploaded transcript, the client can fetch that transcript as a resource via MCP and hand it to the model.

Each resource is identified by a URI. The client reads resources on demand, and the host decides which ones to place into the model's context. MCP standardizes how these resources are delivered to the host, regardless of where they come from.
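A sketch of the transcript example in Python: the client sends a resources/read request with the resource's URI, and the server answers with a contents list. The method name and the uri, mimeType, and text fields follow the MCP specification; the in-memory RESOURCES store, the file URI, and handle_read are hypothetical, and unknown URIs simply raise KeyError here instead of returning a proper error response.

```python
# Hypothetical resource store on the server, keyed by URI.
RESOURCES = {
    "file:///uploads/transcript.txt": ("text/plain", "Speaker 1: Welcome to the show..."),
}

def handle_read(request):
    """Answer a resources/read request with the resource's contents."""
    uri = request["params"]["uri"]
    mime_type, text = RESOURCES[uri]  # KeyError for unknown URIs in this sketch
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"contents": [{"uri": uri, "mimeType": mime_type, "text": text}]},
    }

request = {"jsonrpc": "2.0", "id": 3, "method": "resources/read",
           "params": {"uri": "file:///uploads/transcript.txt"}}
response = handle_read(request)

# The host decides to place the returned text into the model's context.
context_snippet = response["result"]["contents"][0]["text"]
print(context_snippet)
```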

✍️ 3. Prompts

Prompts are reusable instructions or templates that are stored and exposed by the MCP server.

"Summarize this article in 3 bullet points: {{content}}"

Clients request a prompt by name and supply values for its arguments (here, the article content); the server returns the filled-in prompt, which the host injects into the model's context. No prompts need to be hardcoded into the app.
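In the MCP specification, a client requests a prompt with prompts/get, passing the prompt's name and argument values, and the server returns the rendered messages. The Python sketch below shows a server-side handler for the summarize template; the PROMPTS registry, the prompt name "summarize", and the {{name}} placeholder syntax handled by render are hypothetical choices for illustration.

```python
import re

# Hypothetical prompt registry on the server.
PROMPTS = {"summarize": "Summarize this article in 3 bullet points: {{content}}"}

def render(template, arguments):
    """Replace each {{name}} placeholder with arguments[name] (KeyError if missing)."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}",
                  lambda m: str(arguments[m.group(1)]), template)

def handle_get_prompt(request):
    """Answer a prompts/get request with the rendered prompt as one user message."""
    params = request["params"]
    text = render(PROMPTS[params["name"]], params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"messages": [{"role": "user",
                                     "content": {"type": "text", "text": text}}]}}

request = {"jsonrpc": "2.0", "id": 4, "method": "prompts/get",
           "params": {"name": "summarize",
                      "arguments": {"content": "MCP standardizes agent-tool communication."}}}
message = handle_get_prompt(request)["result"]["messages"][0]
print(message["content"]["text"])
# Summarize this article in 3 bullet points: MCP standardizes agent-tool communication.
```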

📍 Why MCP Is a Big Deal

🔌 Plug-and-Play: Easily integrate or swap external tools

🧱 Modular: Tools don't care which model or app is calling them, so you can define a tool once and reuse it across hosts

🌐 Language-agnostic: Build servers in Python, Node, Rust

🧠 Model-agnostic: Works with Claude, GPT, Gemini, etc.

MCP lets you scale agent capabilities without rewriting agent logic or hardwiring tools into your app.

👇 Coming Up Next

Now that you understand the architecture of MCP, it’s time to get hands-on.

In Part 2, we’ll show you how to:

Build your own MCP server (in Python)

Connect it to a client via stdio

Power it with Claude as an LLM host

Run a working REPL where your agent calls tools dynamically

You’ll go from zero to having a Claude agent that knows how to use real tools — locally or remotely.
