🗣️ Voice Agents Evals, Claude Code’s Grep vs RAG, 90%-generated AI Code Hype, Cloud LLM Deploys, and Token “Scams”

Community Insights #9: Evaluating voice bots, the grep vs. vector search grudge match, whether AI really writes 90% of code, easiest EU-friendly LLM hosting, and why OpenAI’s token counts look doubled

Every other Thursday, we surface the most thought-provoking, practical, and occasionally spicy conversations happening inside the AI communities so you never miss out. The MLOps Community Slack is where the sharpest builders and tinkerers ask the hard questions and don't settle for surface-level answers.

In this edition of 🗣️ BuildAIers Talking 🗣️, here are five conversations you don’t want to miss:

Are voice agents finally good enough for production or still just annoying bots?

Will grep-based retrieval survive the RAG hype wave?

Can AI really write 90% of your code, or is that just VC naivety?

What’s the cheapest and easiest way to serve your LLM in the EU?

Why does OpenAI’s token usage double with multiple tool calls?

Grab a coffee ☕, dive in, see what others are actually shipping, and decide what’s hype and what’s helpful. 👇

🎙️ Voice AI in Production: Is Evaluating a “Human-Sounding” Agent Still a Nightmare?

Channel: #agents

Asked by: Anil Muppalla

Anil Muppalla asked how teams are measuring quality and catching failures when they deploy voice agents in the wild.

Key Insights

Cody Peterson: “They kinda suck with unnatural flow… users get annoyed once they realize it’s a bot, and evaluation basically means talking to it live.”

DIY evals: Cody built his own tests with pytest and pre-recorded audio. Bland.ai and HammingAI got shout-outs, but there is no clear winner yet.

Demetrios flagged Coval as one startup laser-focused on automated voice-agent evals.

Reality check: Even the startups building voice stacks admit evaluation is still the hardest unsolved piece.

Editor’s Takeaways

Voice (TTS/LVAS stacks) remains the hardest modality to work with. Evaluating intent flow, latency, and how human-like the agent sounds still requires painful manual QA. Budget time for it, or risk frustrated callers and “sorry, can you repeat that?” moments.

And if you’re going to production, invest early in a repeatable evaluation process with golden test calls, structured rubrics (latency, interruption handling, turn-taking), and post-call scoring.

If you’re betting on phone-based agents, also keep an eye on niche tooling like Coval.
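Here is a minimal Python sketch of the “structured rubric + post-call scoring” idea. It assumes your telephony or eval stack already logs per-turn start/end timestamps. The `Turn` record, the `score_call` helper, the 1-second latency threshold, and the scoring rules are all hypothetical placeholders. They are not Coval’s or any other vendor’s API.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Turn:
    # Hypothetical per-turn log record; field names are illustrative.
    speaker: str  # "user" or "agent"
    start: float  # seconds from call start
    end: float


def score_call(turns: list[Turn], max_latency_s: float = 1.0) -> dict[str, float]:
    """Score one golden test call on latency, interruptions and turn-taking.

    Thresholds are placeholders; calibrate them against calls your team rated by hand.
    """
    latencies = []     # gap between the end of a user turn and the agent's reply
    interruptions = 0  # agent starts talking before the user has finished
    double_turns = 0   # agent speaks twice in a row with no user turn in between
    for prev, cur in zip(turns, turns[1:]):
        if prev.speaker == "user" and cur.speaker == "agent":
            gap = cur.start - prev.end
            if gap < 0:
                interruptions += 1
            else:
                latencies.append(gap)
        elif prev.speaker == "agent" and cur.speaker == "agent":
            double_turns += 1
    agent_turns = sum(t.speaker == "agent" for t in turns) or 1
    med = median(latencies) if latencies else 0.0
    return {
        "median_latency_s": med,
        "latency_ok": float(med <= max_latency_s),
        "interruption_rate": interruptions / agent_turns,
        "double_turn_rate": double_turns / agent_turns,
    }


if __name__ == "__main__":
    sample_call = [
        Turn("agent", 0.0, 2.0),
        Turn("user", 2.5, 4.0),
        Turn("agent", 4.6, 6.0),
        Turn("user", 6.2, 8.0),
        Turn("agent", 7.8, 9.0),  # agent barges in 0.2 s before the user finishes
    ]
    print(score_call(sample_call))
```

Run the same scorer over a fixed set of golden calls after every prompt or model change. This lets regressions in latency or barge-in behaviour show up as numbers instead of anecdotes.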

🔗 Jump to the thread → ↗️

🧭 Code Search for Agents: Is Grep-Only Retrieval Enough or Do You Need Vector/Semantic Search?

Channel: #discussions

Started by: Mirco Bianchini

Is Claude Code’s grep-only retrieval burning too many tokens? A recent Milvus post argues yes, claiming vector retrieval saves cost and improves precision. Meanwhile, LlamaIndex released semtools, a local semantic search CLI that requires no vector DB.

Key Insights

Mehdi B: The Milvus take is biased. In practice, grep works ~99% of the time when used well, while RAG adds complexity, especially in fast-moving repos. For structural code tasks, consider AST/Tree-sitter, not embeddings.

Sugam Garg: “This feels like overengineering. Don’t introduce complex systems for the last 1%.”

Mirco: Testing semtools as a sub-agent; constraining Claude to targeted files also helps.
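This short Python sketch makes Mehdi’s “AST, not embeddings” point concrete by contrasting a grep-style regex search with a structure-aware lookup. It uses Python’s standard-library `ast` module as a stand-in for Tree-sitter, so it only parses Python source. `grep` and `find_definitions` are illustrative helper names, not part of Claude Code or semtools.

```python
import ast
import re


def grep(source: str, name: str) -> list[int]:
    """Grep-style search: every line that mentions `name` as a whole word."""
    pattern = re.compile(rf"\b{re.escape(name)}\b")
    return [i for i, line in enumerate(source.splitlines(), start=1) if pattern.search(line)]


def find_definitions(source: str, name: str) -> list[tuple[str, int]]:
    """Structure-aware search: only the places where `name` is defined."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)) and node.name == name:
            hits.append((type(node).__name__, node.lineno))
    return hits


SAMPLE = '''
def load_config(path):
    return open(path).read()

# load_config is called from main()
def main():
    cfg = load_config("app.toml")
    print("load_config done")
'''

if __name__ == "__main__":
    print(grep(SAMPLE, "load_config"))              # [2, 5, 7, 8]
    print(find_definitions(SAMPLE, "load_config"))  # [('FunctionDef', 2)]
```

Grep returns four lines, including a comment and a string literal. The AST query returns only the definition, which is the kind of precision the thread argues you can get without an embedding index.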

Editor’s Takeaways

For many codebases, smarter grep with structure-aware tools (AST/Tree-sitter) beats full-blown RAG.

Consider local semantic search when repos are huge or cross-cutting context matters, but be mindful of maintenance overhead (branch drift, reindexing).
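The reindexing overhead mentioned above is commonly handled by hashing file contents and re-embedding only what changed, for example after a branch switch. This is a minimal sketch under stated assumptions. The `embed` function is a hypothetical toy (a hashed bag of words) so the example runs offline; you would swap in a real local embedding model. Vectors live in a plain dict and are searched by brute-force cosine similarity, with no vector database. This is not how semtools works internally.

```python
import hashlib
import math
import re

DIM = 256


def embed(text: str) -> list[float]:
    """Toy stand-in for a real embedding model: hashed bag of words, L2-normalised."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z_]+", text.lower()):
        # md5 gives a bucket that is stable across runs (Python's hash() is salted per process)
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class LocalIndex:
    """In-memory index keyed by path; re-embeds a file only when its content hash changes."""

    def __init__(self) -> None:
        self.entries: dict[str, tuple[str, list[float]]] = {}  # path -> (sha256, vector)
        self.embed_calls = 0

    def sync(self, files: dict[str, str]) -> None:
        # Drop files that no longer exist in the working tree (e.g. after a branch switch).
        for path in set(self.entries) - set(files):
            del self.entries[path]
        for path, text in files.items():
            digest = hashlib.sha256(text.encode()).hexdigest()
            cached = self.entries.get(path)
            if cached is None or cached[0] != digest:
                self.entries[path] = (digest, embed(text))
                self.embed_calls += 1

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [(path, sum(a * b for a, b in zip(q, vec))) for path, (_, vec) in self.entries.items()]
        return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


if __name__ == "__main__":
    index = LocalIndex()
    index.sync({"auth.py": "def login(user, password): check password hash",
                "db.py": "def connect(url): open database connection pool"})
    index.sync({"auth.py": "def login(user, password): check password hash",
                "db.py": "def connect(url, timeout): open database connection pool"})
    print(index.embed_calls)  # 3: only db.py was re-embedded on the second sync
    print(index.search("database connection", k=1))
```

The content hash, not the file’s modification time, decides what to re-embed. This means switching back and forth between branches only costs embedding calls for files whose text actually differs.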

🔗 See the debate → ↗️

💻 Is AI Really Writing 90% of Code Now, or Are We Just Reviewing More Garbage Faster?

Channel: #discussions

Started by: Mehdi B

Six months after Dario Amodei’s ambitious “90% of code” prediction, the community takes stock: are we in for a reality check, or on track?

Key Insights

Mariana: “For me, I'm in the phase of fixing the code that was 90% written by AI, and I can't really use AI for that.”

Mehdi B: Run cross-reviews across models (e.g., draft with Claude Sonnet, review with OpenAI’s o3) to catch and resolve issues.

Médéric: It’s hype. AI is a productivity booster (~35%), not an autonomous engineer (yet). Today’s assistants don’t adapt to team style/architecture.

Yasin: Parallelize AI attempts, auto-reject weak PRs, and shift human work to spec + review. Both “AI writes most code” and “humans review everything” can be true.

Varshit: Questions the economic trajectory: if the margins aren’t there, investment (and quality) may plateau, just as with search and social.

Editor’s Takeaways

Treat AI coding assistants/agents today as a force multiplier, not an autopilot, with humans still owning quality control and code reviews.

That said, tools like Augment Code, Claude Code, and Cursor are delivering productivity boosts with features that adapt to project-specific styles and context.

Practical workflows: The teams winning now are spec-first, run parallel attempts, and enforce multi-model review with different models for generation vs. verification.
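The spec-first, parallel-attempts, multi-model-review loop can be sketched as plain orchestration code. Everything here is hypothetical. `generate` and `review` stand in for calls to two *different* models: one drafts and the other reviews, as Mehdi suggests. `passes_checks` stands in for your test suite or linters. The stubs in the usage block exist only so the sketch runs, and this is not any tool’s built-in workflow.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Attempt:
    seed: int
    patch: str
    passed_checks: bool = False
    review_ok: bool = False


def best_attempt(
    spec: str,
    generate: Callable[[str, int], str],   # hypothetical drafting model: (spec, seed) -> patch
    passes_checks: Callable[[str], bool],  # hypothetical tests/linters run on the patch
    review: Callable[[str, str], bool],    # hypothetical *second* model: approves patch vs. spec
    n: int = 4,
) -> Optional[Attempt]:
    # 1. Fan out n independent drafts in parallel.
    with ThreadPoolExecutor(max_workers=n) as pool:
        patches = list(pool.map(lambda seed: generate(spec, seed), range(n)))
    attempts = [Attempt(seed, patch) for seed, patch in enumerate(patches)]
    # 2. Auto-reject anything that fails the automated checks.
    for a in attempts:
        a.passed_checks = passes_checks(a.patch)
    survivors = [a for a in attempts if a.passed_checks]
    # 3. Cross-review the survivors with the other model.
    for a in survivors:
        a.review_ok = review(spec, a.patch)
    approved = [a for a in survivors if a.review_ok]
    # Return the first approved attempt; a human still owns the final review.
    return approved[0] if approved else None


if __name__ == "__main__":
    def fake_generate(spec: str, seed: int) -> str:
        return f"patch-{seed}" + (" TODO" if seed % 2 else "")

    def fake_checks(patch: str) -> bool:
        return "TODO" not in patch

    def fake_review(spec: str, patch: str) -> bool:
        return patch != "patch-0"

    print(best_attempt("add retry to fetch()", fake_generate, fake_checks, fake_review))
```

Keeping generation and review on different models is the point of the design. A reviewer that shares the drafter’s blind spots tends to approve the same mistakes.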

🚀 Where Should You Host a Tiny (200M) Model? Big Cloud or GPU Start-ups?

Channel: #llmops

Asked by: Valdimar Eggertsson

Valdimar’s 200M model is fast on Mac but 10× slower on k8s. What’s the easiest way to host it (preferably in Europe)?

Key Insights

First fix: Valdimar squeezed out a 30× speedup after realizing his Python code path was single-threaded and making it async. 🔥

Cloud options:

GCP GKE AI Infra (one-click-ish GPU on GKE): https://cloud.google.com/kubernetes-engine/docs/integrations/ai-infra

Nebius (EU), Runpod, Lambda, and DigitalOcean: cheaper GPUs if you can handle tradeoffs.

Modal: great dev experience; strong LLM hosting guides; pricier, some lock-in.

Notes from the field:

Patrick: Avoid big clouds unless forced; they’re pricier, and availability is weaker.

Médéric Hurier: Recommended GCP GKE’s one-click deploy but warned of GPU availability issues.

Michal: Nebius support is strong; Lambda with long-term contracts can be cheaper.

Ben: Modal’s LLM Almanac is a handy sizing tool for planning throughput/latency: https://modal.com/llm-almanac/advisor
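The thread doesn’t show Valdimar’s actual change, so here is only a generic sketch of the pattern behind his first fix. When an async server calls a blocking `predict` function directly on the event loop, requests queue up one behind another. Offloading the blocking call to worker threads with `asyncio.to_thread` (Python 3.9+) lets requests overlap. The `predict` stub simulates inference with `time.sleep`. Real gains depend on whether your inference library releases the GIL during heavy ops (many native libraries do, but check yours), so treat the timings as illustrative.

```python
import asyncio
import time


def predict(text: str) -> str:
    """Stand-in for a blocking model call (e.g. a forward pass of a small model)."""
    time.sleep(0.1)  # simulated inference latency
    return text.upper()


async def handle_blocking(requests: list[str]) -> list[str]:
    # Anti-pattern: the blocking call runs on the event loop, so requests are served one by one.
    return [predict(r) for r in requests]


async def handle_offloaded(requests: list[str]) -> list[str]:
    # Each blocking call runs in the default thread pool; the event loop stays free.
    return list(await asyncio.gather(*(asyncio.to_thread(predict, r) for r in requests)))


async def main() -> dict[str, float]:
    reqs = [f"req-{i}" for i in range(8)]
    timings = {}
    for name, handler in [("blocking", handle_blocking), ("offloaded", handle_offloaded)]:
        start = time.perf_counter()
        results = await handler(reqs)
        if results != [r.upper() for r in reqs]:
            raise RuntimeError(f"{name} handler returned unexpected results")
        timings[name] = time.perf_counter() - start
    return timings


if __name__ == "__main__":
    print(asyncio.run(main()))
```

For a CPU-bound model, a process pool or a proper model server gives more headroom than threads. The first step, though, is simply not blocking the event loop.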

Editor’s Takeaways

Sometimes the cheapest “cloud” upgrade is profiling your code. If you still need GPUs and deploy in the EU, niche providers like Modal, DigitalOcean, Nebius, Lambda, and Runpod can undercut AWS/GCP by 2×–4×.

Only consider using GKE/EKS if your organization requires it and you can justify the cost. If you want the easiest path to prod, Modal + vLLM is hard to beat, according to Ben Epstein.
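Before comparing providers, a back-of-envelope capacity estimate tells you how many GPUs you actually need. Modal’s LLM Almanac helps answer this kind of throughput question. Every number below is a hypothetical placeholder, not a benchmark or a real price. Plug in the aggregate throughput you measured under batching and the rates your provider quotes.

```python
import math


def gpus_needed(peak_rps: float, tokens_per_request: float,
                gpu_tokens_per_s: float, target_util: float = 0.7) -> int:
    """GPUs required to serve peak load while keeping headroom (target_util < 1).

    gpu_tokens_per_s should be *measured* aggregate generation throughput per GPU.
    """
    demand = peak_rps * tokens_per_request     # tokens/s the service must generate
    capacity = gpu_tokens_per_s * target_util  # usable tokens/s per GPU
    return max(1, math.ceil(demand / capacity))


def monthly_cost(gpus: int, usd_per_gpu_hour: float, hours: float = 730.0) -> float:
    """Always-on cost; a month is roughly 730 hours."""
    return gpus * usd_per_gpu_hour * hours


if __name__ == "__main__":
    # Hypothetical inputs: 20 req/s at peak, 150 output tokens each, 2,000 tokens/s per GPU.
    n = gpus_needed(peak_rps=20, tokens_per_request=150, gpu_tokens_per_s=2000)
    print(n)  # 3
    for provider, rate in [("provider_a", 2.0), ("provider_b", 0.8)]:  # hypothetical $/GPU-hour
        print(provider, round(monthly_cost(n, rate)))
```

Because GPU count is fixed by load, the provider comparison reduces to the hourly rate times hours. Any rate gap between providers carries straight through to the monthly bill.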

🔗 Explore the thread → ↗️

💸 Is OpenAI Charging Double Tokens When You Use Multiple Tool Calls?

Channel: #llmops

Asked by: Valdimar Eggertsson

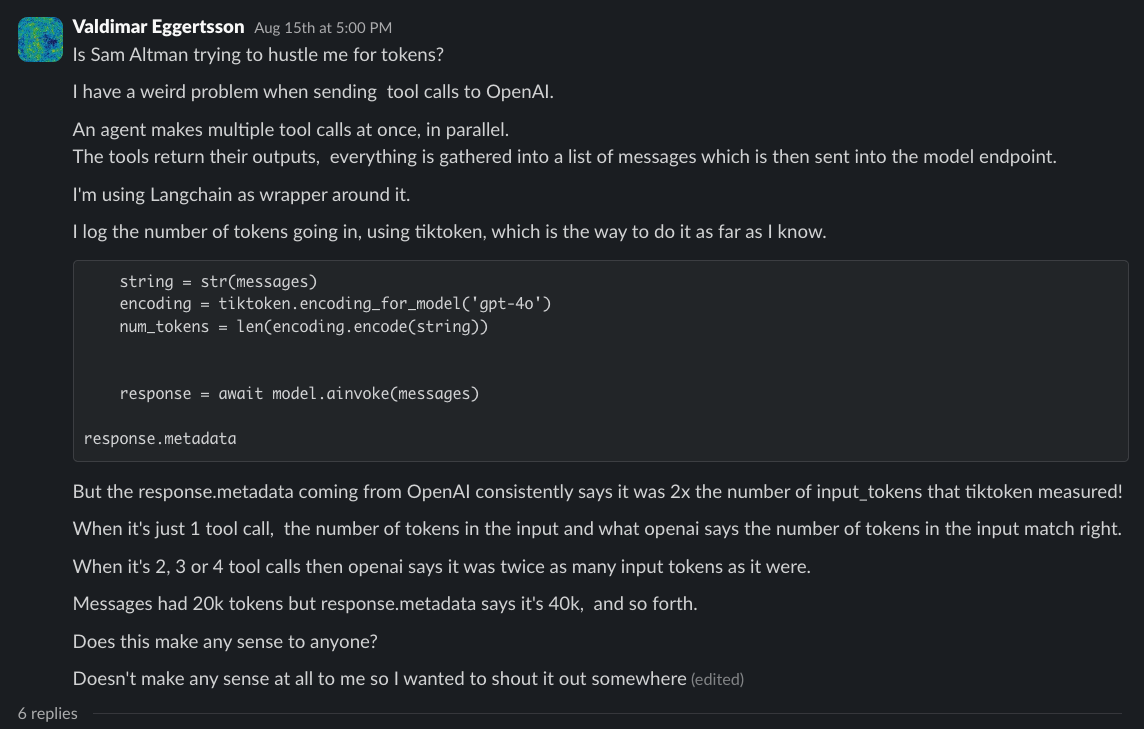

Valdimar noticed something odd: when sending multiple tool calls via LangChain to OpenAI, the response metadata token count is ~2× what tiktoken reports, and only for multi-tool-call messages.

Key Insights

Structured chat messages (role=assistant/tool plus tool-call payloads) inflate token counts relative to raw strings.

Sam Z.: Tokenizers change often. tiktoken can drift from provider-side tokenizers.

Valdimar: With a single tool call, the counts match exactly; with two or more, the metadata count doubles. It seems systematic.

Working theory: OpenAI counts structured roles and tool content differently than your pre-count, especially with parallel tool calls.
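The structured-message point is easier to see with a pre-count that adds per-message overhead instead of tokenizing raw strings. This sketch follows the approximate heuristic from OpenAI’s cookbook for chat models: about 3 extra tokens per message, 1 per `name` field, and 3 to prime the reply. OpenAI does not document exactly how tool-call payloads are counted, so serializing them as JSON here is an assumption. The sketch does not explain the 2× gap. The tokenizer is passed in as a function so the code runs without `tiktoken`; in practice you would pass `tiktoken.encoding_for_model(model).encode`.

```python
import json
from typing import Any, Callable, Sequence

TOKENS_PER_MESSAGE = 3  # cookbook heuristic for recent chat models
TOKENS_PER_NAME = 1
REPLY_PRIMING = 3       # tokens that prime the assistant's reply


def estimate_prompt_tokens(messages: list[dict[str, Any]],
                           encode: Callable[[str], Sequence[object]]) -> int:
    total = REPLY_PRIMING
    for message in messages:
        total += TOKENS_PER_MESSAGE
        for key, value in message.items():
            if value is None:
                continue
            # Assumption: non-string fields (e.g. tool_calls) are counted as their JSON text.
            text = value if isinstance(value, str) else json.dumps(value)
            total += len(encode(text))
            if key == "name":
                total += TOKENS_PER_NAME
    return total


def raw_string_tokens(messages: list[dict[str, Any]],
                      encode: Callable[[str], Sequence[object]]) -> int:
    """What you get by tokenizing only the visible content strings."""
    return sum(len(encode(m["content"])) for m in messages if isinstance(m.get("content"), str))


# Chat Completions-style history with two parallel tool calls.
EXAMPLE_MESSAGES = [
    {"role": "user", "content": "weather in Paris and Oslo"},
    {"role": "assistant", "content": None, "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_weather", "arguments": "{\"city\": \"Oslo\"}"}},
    ]},
    {"role": "tool", "tool_call_id": "call_1", "content": "18C"},
    {"role": "tool", "tool_call_id": "call_2", "content": "9C"},
]

if __name__ == "__main__":
    toy_encode = str.split  # stand-in tokenizer; use tiktoken in practice
    print(raw_string_tokens(EXAMPLE_MESSAGES, toy_encode),
          estimate_prompt_tokens(EXAMPLE_MESSAGES, toy_encode))
```

Per-message overhead and tool-call payloads are exactly what a raw-string count misses. Each additional parallel tool call adds both.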

Editor’s Takeaways

If you’re cost-sensitive and using parallel tool calls, measure what OpenAI bills, not what tiktoken predicts.

Consider flattening tool content, minimizing payloads, or testing a direct API call (no wrapper) to isolate the discrepancy.
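To act on “measure what OpenAI bills”, log the provider-reported usage next to your own estimate for every call and flag large gaps. In the OpenAI Python SDK, chat completion responses carry `usage.prompt_tokens`. LangChain exposes equivalent counts in its response metadata. The sketch below only assumes you can extract those integers. It keeps the comparison logic pure so it runs offline. The `UsageRecord` fields, the `flag_drift` helper, and the 1.5× threshold are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    request_id: str
    n_tool_calls: int
    estimated_prompt_tokens: int  # your pre-count (e.g. tiktoken-based)
    billed_prompt_tokens: int     # provider-reported usage.prompt_tokens

    @property
    def ratio(self) -> float:
        return self.billed_prompt_tokens / max(1, self.estimated_prompt_tokens)


def flag_drift(records: list[UsageRecord], threshold: float = 1.5) -> dict[int, float]:
    """Average billed/estimated ratio per tool-call count, keeping only groups above threshold."""
    groups: dict[int, list[float]] = {}
    for r in records:
        groups.setdefault(r.n_tool_calls, []).append(r.ratio)
    averages = {n: sum(v) / len(v) for n, v in groups.items()}
    return {n: avg for n, avg in sorted(averages.items()) if avg >= threshold}


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    log = [
        UsageRecord("a", 1, 410, 410),
        UsageRecord("b", 2, 520, 1040),
        UsageRecord("c", 3, 640, 1290),
    ]
    print(flag_drift(log))
```

Grouping by tool-call count isolates the pattern the thread describes. If only the multi-call groups are flagged, compare the same payload sent through the wrapper and through a direct API call.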

🔗 Discover cost-saving strategies → ↗️

Which community discussions should we cover next? Drop suggestions below!

We created ⚡ BuildAIers Talking to be the pulse of the AI builder community—a space where we share and amplify honest conversations, lessons, and insights from real builders. Every other Thursday!

📌 Key Goals for Every Post:

✅ Bring the most valuable discussions from AI communities to you.

✅ Build a sense of peer learning and collaboration.

✅ Give you and other AI builders a platform to share your voices and insights.

The discussions and insights in this post originally appeared in the MLOps Community Newsletter as part of our weekly column contributions for the community (published every Tuesday and Thursday).

Want more deep dives into MLOps conversations, debates, and insights? Join the Slack community here; Slack sign-up is required.

Subscribe to the MLOps Community Newsletter (20,000+ builders) delivered straight to your inbox every Tuesday and Thursday! 🚀

Know someone who would love this? Share it with your AI builder friends!