How to Build a Claude-Powered AI Agent with the Model Context Protocol (MCP)

BuildAIers Toolkit #4: Build an MCP server, client, and Claude-powered AI host that can call tools, retrieve context, and generate dynamic replies.

If you’ve been following how AI agents are evolving—from OpenAI’s Agents SDK to Claude’s tool-use capabilities—you’ve likely noticed one major trend:

Modern agents aren’t just chatting anymore. They’re calling tools, invoking APIs, and orchestrating actions in the real world.

But the real magic doesn’t happen inside the language model—it happens at the edges, in the way these agents interface with tools, data, and services.

That’s where the Model Context Protocol (MCP) comes in.

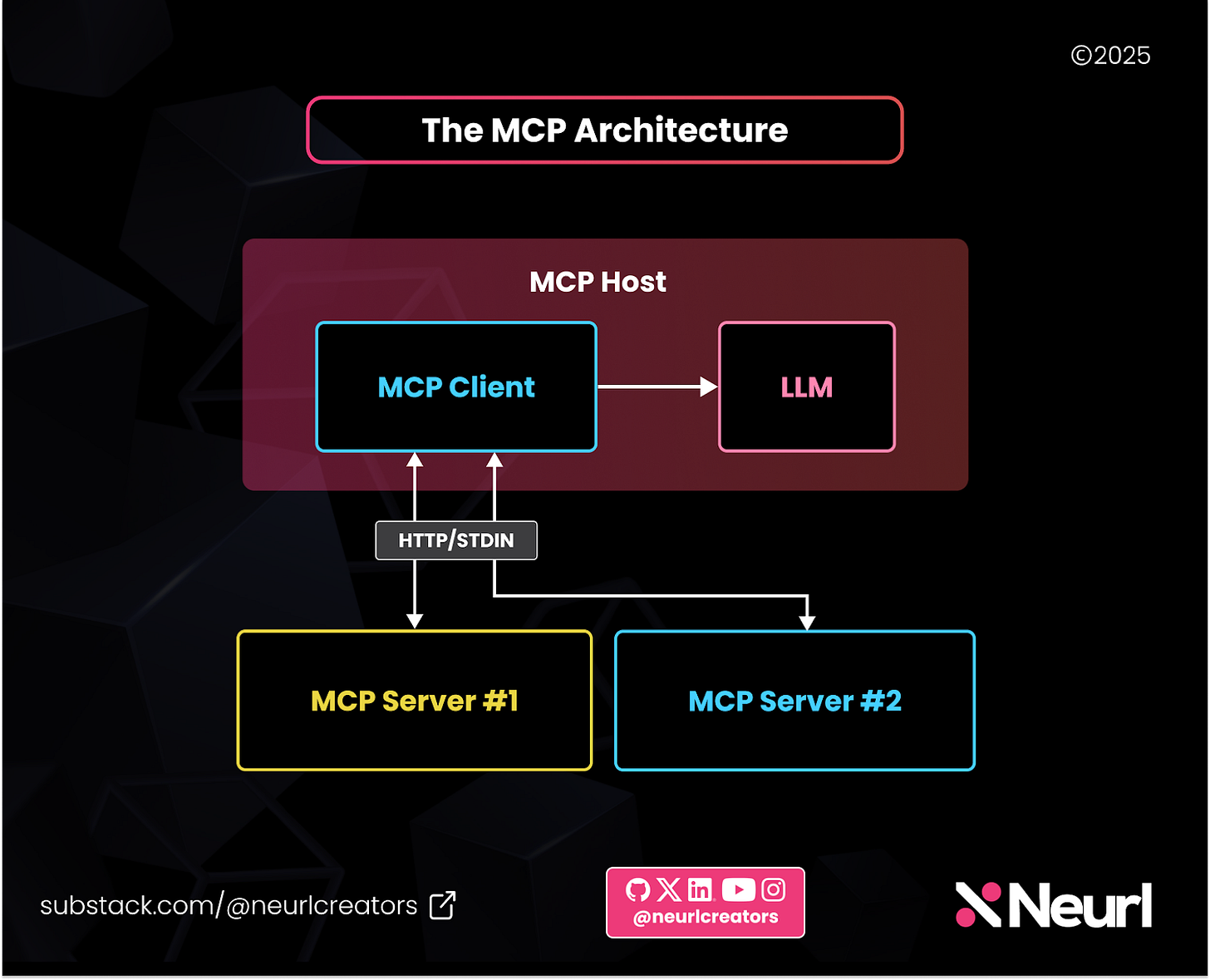

In Part 1 of this series, we broke down the core components of MCP:

The Host (where the model lives)

The Client (that mediates communication)

The Server (where tools, resources, and prompts live)

The Transports (local or remote) that glue everything together

We used diagrams and examples to explain how MCP decouples agent intelligence from tool execution, letting you mix and match servers, LLMs, and languages — all through a clean, open protocol.

Now it’s time to build it. In this tutorial, you’ll:

✅ Build a complete MCP server using Python

✅ Connect it to a minimal MCP client over stdio

✅ Integrate Claude 3.5 Sonnet to act as the LLM brain

✅ Run a working REPL chatbot that uses real tools

✅ Swap in different tools (even from other languages) with zero refactoring

By the end, you won’t just understand how MCP works—you’ll have built a tool-using AI agent that can reason, respond, and interact with services just like the leading agents on the market.

Let’s dive in and bring this architecture to life. 👇

🛠 Let’s Build a Simple MCP Server (with Python)

Let’s build a simple MCP server using Python. We'll include all three types of services—tools, resources, and prompts—and connect to it with a minimal MCP client.

We'll start by creating a simple server using the official Python SDK that exposes:

A tool to get the current time in any timezone

A resource that shares static info

A prompt for refactoring code

🔧 Step 1: Install the MCP SDK

MCP provides an official Python SDK that supports both client and server development. Official SDKs are also available for other languages, including TypeScript, Java, and Kotlin, but this tutorial focuses on the Python version.

Install it with:

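```bash
pip install mcp
```

🧪 Note: The MCP Python SDK requires Python 3.10 or later. The zoneinfo module used below has been in the standard library since Python 3.9. On Windows, or in a minimal Linux container without system time zone data, you may also need to install the tzdata package.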

🏗️ Step 2: Create the Server File

Let’s create a new file called server.py. MCP provides two approaches for building servers:

Server: a low-level API for full control

FastMCP: a high-level wrapper (we’ll use this API to define our server and its services for simplicity)

Start by setting up the server:

```python
# server.py
from mcp.server.fastmcp import FastMCP

# Create a FastMCP server instance
mcp = FastMCP("Tutorial")
```

Now that we have our server instance, let's add tools to it.

🧰 Step 3: Add a Tool to the MCP Server—get_time_in_timezone

Creating a tool in MCP is as easy as defining an endpoint in FastAPI. Before writing the tool’s function, let’s decide what it should do. To keep things simple, we’ll create a tool that takes a time zone as input and returns the current time in that region.

To define a tool, we:

Write a function that implements the desired functionality.

Use the @mcp.tool decorator to mark it as an MCP tool.

Use type hints to specify input and output types (which MCP will automatically recognize).

Define a tool that takes a timezone string and returns the current time in that zone:

```python
from datetime import datetime
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError

@mcp.tool()
def get_time_in_timezone(timezone: str) -> str:
    """Returns the current time for a given timezone."""
    try:
        tz = ZoneInfo(timezone)
        current_time = datetime.now(tz)
        return current_time.strftime("%Y-%m-%d %H:%M:%S %Z%z")
    except (ZoneInfoNotFoundError, ValueError):
        # Unknown keys raise ZoneInfoNotFoundError; malformed keys raise ValueError.
        return "Invalid timezone. Try something like 'America/New_York'."
```

🧠 What’s happening?

The @mcp.tool() decorator registers the function with the MCP server and makes it callable by agents. Type hints help MCP automatically expose the input/output schema.

You can define as many tools as you want. Each one becomes callable from any MCP-compatible client.
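To make the role of type hints concrete, here is a minimal standard-library sketch of how a function signature could be turned into a tool schema. It is only an illustration: describe_tool and JSON_TYPES are hypothetical names, and the SDK's own schema generation is more thorough than this.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from Python types to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func):
    """Build a tool description from a function's signature and docstring."""
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "input_schema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def get_time_in_timezone(timezone: str) -> str:
    """Returns the current time for a given timezone."""
    return "placeholder"


schema = describe_tool(get_time_in_timezone)
print(schema)
```

The docstring becomes the description the model reads when deciding whether to call the tool, so it is worth writing carefully.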

📂 Step 4: Add a Resource

Resources are like files or data blobs that an LLM might need access to.

Defining a resource follows a similar process as defining a tool. The main difference is that the @mcp.resource decorator requires a URI, which clients will use to fetch the resource.

For example, if we want to provide a resource containing a sample text:

```python
@mcp.resource("info://resource")
def get_resource():
    """Get a resource from the server."""
    return "This is a sample resource from the MCP server."
```

The string "info://resource" is a URI for the AI agent to query this endpoint. @mcp.resource() makes static or dynamic data available.

✍️ Step 5: Add a Prompt

Prompts are reusable instruction templates stored server-side. You can define a prompt function without arguments, in which case it simply returns a static prompt.

Alternatively, it can accept arguments, making it a dynamic prompt template that adapts based on the provided input.

Here’s how to define one:

```python
@mcp.prompt()
def refactor_prompt(code: str) -> str:
    """Prompt to refactor the given code snippet."""
    return f"Please refactor the following Python code to improve readability and follow best practices:\n\n{code}"
```

@mcp.prompt() → “Stores reusable prompts or templates”
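The difference between a static and a dynamic prompt can be sketched with two plain functions. The names review_checklist_prompt and translate_prompt are hypothetical; in server.py each would also carry the @mcp.prompt() decorator, which is left out here so the snippet runs without the SDK.

```python
def review_checklist_prompt() -> str:
    """Static prompt: no arguments, always returns the same text."""
    return "Review the code for bugs, unclear names, and missing tests."


def translate_prompt(text: str, language: str = "French") -> str:
    """Dynamic prompt: the template is filled in from the arguments."""
    return f"Translate the following text into {language}:\n\n{text}"


print(review_checklist_prompt())
print(translate_prompt("Good morning", language="Spanish"))
```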

🚀 Step 6: Run the MCP Server

Now, run the server using the stdio transport. This allows the server to be invoked directly by a client as a subprocess.

```python
if __name__ == "__main__":
    mcp.run(transport="stdio")
```

📌 Unlike traditional web servers, an MCP server launched with stdio is executed as a subprocess by the MCP client.
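For a sense of what travels over that subprocess pipe: MCP messages are JSON-RPC 2.0 objects, and the stdio transport sends them one per line. The sketch below is a simplified illustration using only the standard library. The helper names encode_message and decode_messages are hypothetical, the SDK handles this framing for you, and a real session performs an initialize handshake before any tool call.

```python
import json


def encode_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the line stays intact.
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_messages(stream: bytes) -> list:
    """Split a byte stream into JSON-RPC messages, one per line."""
    lines = stream.decode("utf-8").splitlines()
    return [json.loads(line) for line in lines if line.strip()]


# A request asking the server to run the tool defined earlier.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "get_time_in_timezone",
        "arguments": {"timezone": "America/New_York"},
    },
}

wire = encode_message(request)
print(wire)
print(decode_messages(wire)[0]["method"])
```

Because stdout carries protocol messages in this mode, any debug output from the server is safer on stderr.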

Save the full server code in server.py. ✅

You can find the complete code here.

🛠️ Server Debugging Tip

If your MCP server doesn't start correctly or exits immediately:

Confirm you've properly decorated your tools, resources, and prompts with @mcp.tool(), @mcp.resource(), and @mcp.prompt()

Make sure mcp.run(transport="stdio") is inside a __main__ guard

Check that there are no import errors (e.g., ZoneInfo requires Python 3.9+)

Add print/log statements above mcp.run() to verify the script is executing

Next, we’ll create a script that acts as the client and connects to our server via STDIO.

🤖 Let’s Build a Simple MCP Client

Create a new file called client.py.

The client will:

Launch server.py via stdio

Connect and start a session

List available services

Invoke each service and print results

⚠️ Heads up!

Make sure your server.py file and this client script are located in the same directory. If not, update the command or args in StdioServerParameters to point to the correct file path.
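One way to avoid depending on the current working directory is to build the launch settings from an absolute path. This is a sketch under assumptions: server_launch_config is a hypothetical helper, and it returns a plain dict so it runs without the SDK installed.

```python
import sys
from pathlib import Path


def server_launch_config(script_dir: Path, script_name: str = "server.py") -> dict:
    """Return a command/args pair that points at the server script by absolute path."""
    script_path = (script_dir / script_name).resolve()
    return {
        # sys.executable is the interpreter running the client, so the server
        # starts in the same virtual environment.
        "command": sys.executable,
        "args": [str(script_path)],
    }


config = server_launch_config(Path.cwd())
print(config)
```

In client.py you could pass Path(__file__).parent as script_dir and hand the result to StdioServerParameters(**config).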

Our MCP client will communicate with the server via stdio. To establish this communication, we need three key components:

StdioServerParameters: Defines the command used to run the server script.

stdio_client: Takes in the server parameters and returns read and write streams for communication.

ClientSession: Creates a session between the client and server.

🧾 Step 1: Setup Parameters and Session

```python
# client.py
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Set server launch config
server_params = StdioServerParameters(
    command="python",
    args=["server.py"],
)
```

🔄 Step 2: Connect, Initialize a Session, and List Services

```python
import asyncio

async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()

            # List services
            print(await session.list_prompts())
            print(await session.list_resources())
            print(await session.list_tools())
```

Both stdio_client and ClientSession run within an asynchronous context, so they need to be executed inside an async block.
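If nested async with blocks are unfamiliar, the following standard-library sketch shows the order in which they open and close. fake_transport and fake_session are hypothetical stand-ins for stdio_client and ClientSession, not the SDK's implementations.

```python
import asyncio
from contextlib import asynccontextmanager

events = []


@asynccontextmanager
async def fake_transport():
    """Stand-in for stdio_client: yields a (read, write) pair."""
    events.append("transport opened")
    try:
        yield "read-stream", "write-stream"
    finally:
        events.append("transport closed")


@asynccontextmanager
async def fake_session(read, write):
    """Stand-in for ClientSession: wraps the two streams."""
    events.append("session opened")
    try:
        yield {"read": read, "write": write}
    finally:
        events.append("session closed")


async def run():
    async with fake_transport() as (read, write):
        async with fake_session(read, write) as session:
            events.append(f"using {session['read']}")


asyncio.run(run())
print(events)
```

The session closes before the transport does, which mirrors why all session calls in client.py have to stay inside the inner block.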

💬 Step 3: Invoke Services

Within a session, you can perform various tasks, such as listing all available tools, resources, and prompts.

Directly invoke services from the client using their corresponding methods:

get_prompt: Retrieves a stored or templated prompt.

read_resource: Fetches a specific resource.

call_tool: Executes a tool function.

```python
            # These calls go inside the ClientSession block of run(),
            # after session.initialize().

            # Call prompt
            prompt = await session.get_prompt(
                "refactor_prompt", arguments={"code": "print('Hello World')"}
            )
            print(prompt)

            # Read resource
            content = await session.read_resource("info://resource")
            print(content)

            # Call tool
            result = await session.call_tool(
                "get_time_in_timezone", arguments={"timezone": "America/New_York"}
            )
            print(result)
```

🏁 Step 4: Run the Client

```python
if __name__ == "__main__":
    asyncio.run(run())
```

Wrap the session inside an asynchronous function and use the asyncio library to execute it.

You can find the complete code here.

💥 Troubleshooting Tip

If the client hangs or fails to connect:

Ensure server.py exists in the same directory and is executable

Make sure you're using Python 3.10+ (required by the mcp SDK; zoneinfo itself needs 3.9+)

Check that the mcp SDK is installed in your current environment

Add print() logs in server.py to verify that it’s running

✅ You Did It!

You’ve now built a complete MCP server with tools, resources, and prompts—and connected it to a working MCP client via STDIO.

Next up: you’ll transform this into a full MCP Host by integrating it with an LLM.

🚀 Building an MCP Host: Claude + Tools = Magic

Let’s now go one step further and turn our MCP client into a fully functional MCP host by adding an LLM into the loop. An MCP host includes:

An MCP client (already built),

A large language model (Claude, in this case),

And optionally, any extra app logic—a UI or interface (we’ll use the command line).

In this section, you will integrate the Claude 3.5 Sonnet model to power a simple command-line chatbot that can automatically call tools from the MCP server when needed.

🔧 What We’re Building

You'll create:

An interactive REPL-style chatbot powered by Claude

A tool-aware LLM agent that auto-calls get_time_in_timezone() via MCP

A flexible host that can connect to any MCP server—even ones written in Node.js or other languages

We'll focus on tools only for simplicity. Resources and prompts work similarly; they can be added later.

📂 File Structure

Here’s the current file structure, which should help when you’re testing multiple server/client setups (e.g., local and Node.js-based):

```text
.
├── server.py   # Your MCP server
├── client.py   # Your MCP client
└── host.py     # Claude host
```

📦 Pre-requisite: Set Up Claude and MCP SDK

Before continuing, make sure you’ve installed the necessary libraries:

```bash
pip install mcp anthropic
```

Set your Claude API key as an environment variable:

```bash
export ANTHROPIC_API_KEY='sk-...'
```

🛠️ Note: Claude's SDK uses API keys stored in os.environ['ANTHROPIC_API_KEY'] by default.

🧠 How It Works (Before Code)

User types a prompt in the REPL

Claude receives the prompt along with the list of available tools

If it detects a tool is needed, it includes a tool_use directive in the response

Your host calls the tool via MCP

The tool output is returned to Claude to finish the reply
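The steps above can be simulated without any network calls. In this sketch fake_model is a hypothetical stand-in for Claude and get_utc_time is a hypothetical local tool; the real host sends the messages to the Anthropic API and routes tool calls through the MCP session instead.

```python
from datetime import datetime, timezone


def fake_model(messages):
    """Stand-in for Claude: asks for a tool first, then answers with its result."""
    last = messages[-1]
    if isinstance(last["content"], str):
        # Plain user text: request a tool call.
        return {"type": "tool_use", "id": "call_1", "name": "get_utc_time", "input": {}}
    # The last message carries a tool result: produce the final answer.
    return {"type": "text", "text": f"The UTC time is {last['content'][0]['content']}."}


def get_utc_time():
    """Hypothetical local tool; the real host would call the MCP server instead."""
    return datetime.now(timezone.utc).strftime("%H:%M")


TOOLS = {"get_utc_time": get_utc_time}


def answer(user_text):
    """Run one user turn to completion, executing tools as the model requests them."""
    messages = [{"role": "user", "content": user_text}]
    while True:
        reply = fake_model(messages)
        messages.append({"role": "assistant", "content": [reply]})
        if reply["type"] == "text":
            return reply["text"], messages
        # Run the requested tool and hand the output back to the model.
        output = TOOLS[reply["name"]](**reply["input"])
        messages.append({
            "role": "user",
            "content": [{"type": "tool_result", "tool_use_id": reply["id"], "content": output}],
        })


text, history = answer("What time is it?")
print(text)
print([m["role"] for m in history])
```

Note that the tool result goes back as a user message that references the id of the tool request it answers.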

✅ Step 1: List Available Tools

Define list_available_tools() to fetch the list of tools from the MCP server and prepare them for Claude.

```python
async def list_available_tools(session):
    """Retrieve and format the list of available tools."""
    try:
        tool_list = await session.list_tools()
        return [{
            "name": tool.name,
            "description": tool.description,
            "input_schema": tool.inputSchema
        } for tool in tool_list.tools]
    except Exception as e:
        print(f"Error fetching tool list: {e}")
        return []
```

📘 We fetch tools from the MCP server and format them for Claude’s function calling interface.
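The conversion is mostly a renaming exercise: MCP reports the schema as inputSchema, while Claude's tools parameter expects input_schema. Here is a small sketch of the mapping on a stand-in object. The SimpleNamespace value is a hypothetical approximation of one entry from session.list_tools(), and the schema the SDK actually generates may contain extra fields.

```python
from types import SimpleNamespace


def to_claude_tool(tool):
    """Map one MCP tool description onto the fields Claude's tools parameter expects."""
    return {
        "name": tool.name,
        # Fall back to an empty string if the server supplied no description.
        "description": tool.description or "",
        "input_schema": tool.inputSchema,
    }


# Hypothetical stand-in for an entry in session.list_tools().tools.
mcp_tool = SimpleNamespace(
    name="get_time_in_timezone",
    description="Returns the current time for a given timezone.",
    inputSchema={
        "type": "object",
        "properties": {"timezone": {"type": "string"}},
        "required": ["timezone"],
    },
)

claude_tool = to_claude_tool(mcp_tool)
print(claude_tool)
```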

🤖 Step 2: Send Prompt to Claude

Define get_claude_response() to send messages + tool schema to Claude and return the response.

```python
from anthropic import Anthropic

anthropic = Anthropic()

async def get_claude_response(messages, tools):
    """Fetch a response from Claude AI."""
    try:
        # This synchronous call blocks the event loop until Claude responds.
        return anthropic.messages.create(
            model="claude-3-5-sonnet-20241022",
            max_tokens=1000,
            messages=messages,
            tools=tools
        )
    except Exception as e:
        print(f"Error fetching Claude response: {e}")
        return None
```

⚠️ Note: The Anthropic client used here is synchronous, so messages.create is called without await and blocks until the response arrives. That is acceptable for a single-user REPL. The SDK also provides an AsyncAnthropic client whose calls can be awaited.

If you're using it inside an async function (as we are), you may want to offload the call using an executor to avoid blocking the event loop:

```python
import asyncio

# Inside an async function:
loop = asyncio.get_running_loop()
response = await loop.run_in_executor(None, lambda: anthropic.messages.create(...))
```

🛠 Step 3: Handle Tool Calls

You need to execute a tool request using the MCP session and return the result. Define a function that will trigger if Claude’s response includes a tool call:

```python
async def handle_tool_use(session, content):
    """Execute a tool call and return the result."""
    try:
        result = await session.call_tool(content.name, content.input)
        return {
            "tool_use_id": content.id,
            "content": result.content[0].text if result.content else "No response from tool.",
        }
    except Exception as e:
        print(f"Error executing tool {content.name}: {e}")
        # result is not defined if call_tool raised, so report the error text instead.
        return {
            "tool_use_id": content.id,
            "content": f"Error executing tool: {e}",
            "is_error": True,
        }
```

💬 Step 4: Build the Interactive Chat Loop

Define chat() to run the REPL loop and coordinate tool invocation and response generation:

```python
async def chat(session):
    """Run the interactive chat loop."""
    messages = []
    tools = await list_available_tools(session)

    while True:
        user_input = input("\nProvide your prompt (or type 'exit' to quit): ").strip()
        if user_input.lower() == "exit":
            print("Exiting chat.")
            break

        messages.append({"role": "user", "content": user_input})
        final_text = []

        # Keep calling Claude until it stops requesting tools.
        while True:
            response = await get_claude_response(messages, tools)
            if not response or not response.content:
                print("Error: No response from Claude.")
                break

            # Record the assistant turn (text and tool_use blocks) in the history.
            messages.append({"role": "assistant", "content": response.content})

            tool_results = []
            for content in response.content:
                if content.type == "text":
                    final_text.append(content.text)
                elif content.type == "tool_use":
                    tool_result = await handle_tool_use(session, content)
                    tool_results.append({"type": "tool_result", **tool_result})

            if not tool_results:
                break

            # Return every tool result to Claude in a single user turn.
            messages.append({"role": "user", "content": tool_results})

        if final_text:
            print("\nAssistant:", "\n".join(final_text))
```

Claude Tool Use Flow:

🧠 Claude detects a tool → Sends tool_use → You respond with tool_result → Claude completes reply

🔁 Step 5: Bring It All Together

Create your REPL host as host.py and structure your entrypoint like this:

```python
import asyncio
import sys

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

async def run(server_params):
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            await chat(session)

async def main():
    if len(sys.argv) < 2:
        print("Usage: python host.py <server_command> [args]")
        sys.exit(1)

    server_params = StdioServerParameters(
        command=sys.argv[1], args=sys.argv[2:]
    )
    await run(server_params)

if __name__ == "__main__":
    asyncio.run(main())
```

run() + main() bootstraps the stdio connection to your MCP server and launches the chat loop.
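The entrypoint treats the first argument after the script name as the server command and everything after it as that command's arguments. A small sketch of the split, using a hypothetical helper named split_server_command:

```python
def split_server_command(argv):
    """Split host arguments into the server command and its arguments."""
    if len(argv) < 2:
        raise ValueError("Usage: python host.py <server_command> [args]")
    # argv[0] is the host script itself; the rest describes the server to launch.
    return argv[1], argv[2:]


print(split_server_command(["host.py", "python", "server.py"]))
print(split_server_command(
    ["host.py", "npx", "-y", "@modelcontextprotocol/server-filesystem", "."]
))
```

This is what lets the same host launch a Python server or an npx package without code changes.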

You can find the complete code with all necessary imports here.

⚠️ Troubleshooting Tip

If your host script crashes, hangs, or fails to respond:

✅ Ensure your Anthropic API key is correctly set as an environment variable (ANTHROPIC_API_KEY)

✅ Verify that server.py exists and runs without errors

✅ Check that both mcp and anthropic Python packages are installed

✅ Make sure Claude is available in your region and supports tool use

🧪 Add print() logs before and after await session.initialize() or in the REPL loop to track where the error occurs

💡 Run python server.py on its own to make sure the server starts cleanly

🧪 Testing Your Host

Save the host script as host.py, and make sure server.py is in the same folder.

Run your new host like this:

```bash
python host.py python server.py
```

You should see a REPL like this:

```text
Provide your prompt (or type 'exit' to quit): What is the time in Dubai?

A: I’ll use the get_time_in_timezone tool...
The time in Dubai is 12:58 PM (UTC+4).
```

🔁 Connecting to Other MCP Servers

Now comes the fun part—language-agnostic interoperability.

Instead of using your Python server, you can connect to the File System MCP server written in Node.js:

```bash
python host.py npx -y @modelcontextprotocol/server-filesystem .
```

Now, your Claude-powered chatbot can:

Browse directories

Read local files

Interact with any tool exposed via that server

✅ What You Just Built:

A Claude-powered chatbot that can dynamically call external tools

A host that follows the MCP protocol over stdio

A flexible system where you can swap tools in and out with minimal changes

💡 Why This Matters

This is the real power of MCP:

One agent

Modular tooling

Multi-language compatibility

Unified protocol

Real-time LLM integration

Whether the server is your own Python script or a Node.js package launched with npx, you can now build agents that reason, retrieve, and act with modularity and interoperability baked in, all without rewriting your host logic.

🚀 Wrapping Up

Phew! You’ve gone from understanding the Model Context Protocol (MCP) to building and running a fully functional AI host powered by Claude, complete with a custom server, REPL interface, and real-time tool integration.

In this tutorial, we built:

✅ A fully functioning MCP server with tools, resources, and prompts

✅ An MCP client that communicates over stdio

✅ A complete MCP host that integrates Claude to reason, call tools, and generate intelligent responses

✅ A chat interface that works with any MCP-compatible server, even across languages like Node.js

With just a few files and the right abstractions, you now have a blueprint for building tool-augmented AI agents using open standards.

🔍 What makes MCP so compelling is its modularity, reusability, and language-agnostic design. You write a tool once—and any AI agent that speaks MCP can use it.

So whether you're designing custom IDEs, command-line copilots, voice interfaces, or agentic backends, MCP gives you the plumbing to scale.

⏭️ Ready to Take the Next Step?

Here are a few ideas to extend what you've built:

🔌 Add new tools (e.g., search, math, summarization) to your MCP server

🌐 Try running the client in a remote transport mode (HTTP + SSE)

🤖 Swap Claude with OpenAI’s GPT models using function calling

🧪 Explore the official MCP docs and MCP GitHub for third-party servers.

Or try building your own MCP server to expose a database, search engine, or internal tool to an LLM agent.

The future of AI agents isn’t just about better models—it’s about better context, better tools, and better protocols.

Let’s build it. ⚡

💬 What Will You Build?

If you take this further or remix the example into something cool, we’d love to see it.

Tag us on X/Twitter @neurlcreators or drop a link in the comments on Substack. Let's build the next wave of agent-native applications—together.

🛠️ Talk to us at Neurl LLC to help you create high-quality, high-impact, and targeted content for your growth team.