🛠️ BuildAIers Talking #4: Fixing Bad Annotations, SQL Agents, Auto-Training Pipelines, Phi-4, and the A2A vs MCP Debate

Community Insights #4: From better transcription pipelines and SQL agents to Microsoft’s quiet model drop and the A2A vs. MCP agent protocol debate—here’s what AI builders are talking about this week

The discussions and insights in this post originally appeared in the MLOps Community Newsletter as part of our weekly column contributions for the community (published every Tuesday and Thursday).


Each week, the MLOps Community Slack is buzzing with insights from AI engineers, researchers, founders, and practitioners solving real-world problems.

Not everyone has time to scroll Slack all day (even if we’d love to). So we’ve done the work for you.

In this edition, we spotlight five conversations that dig into the hard questions AI teams are facing in 2025:

The real challenge of fixing bad annotations (and who to hire)

Problems with building and using analytics agents. Hint: bad schemas

What’s the best way to productionize deep learning training jobs?

Is Phi-4 the best open-weight model today?

Google A2A vs. MCP: Agent protocol war or power duo?

Ready to catch up? Let’s jump in 👇

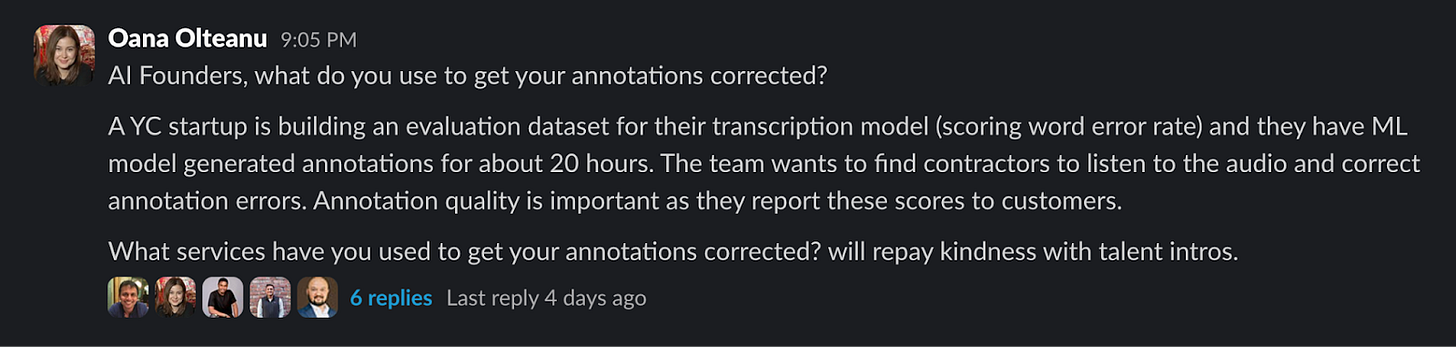

🎧 What’s the Best (and Cheapest) Way to Correct Model Annotations at Scale?

Channel: #mlops-questions-answered

Asked by: Oana Olteanu

A YC startup is building an evaluation dataset for a transcription model and needs to correct 20+ hours of ML-generated annotations—on a budget. Oana asked the community what services or hacks people are using to improve annotation quality for audio data.

Key Insights:

Anusheel Bhushan recommended e2f for human-in-the-loop transcription.

Joshua Obwengi pointed to Label Your Data, another known vendor in this space.

Vivek Katial shared a DIY path—build a Gradio app and let your team label internally, or hire students for cheaper labor.

Ali Mukhamadiev suggested using an LLM as a first pass to reduce contractor workload, or generating synthetic data to enrich your eval set.

Takeaway: High-quality annotation doesn’t always require expensive labeling teams. With the right combo of tools, in-house help, and smart LLM-assisted pre-processing, you can achieve solid results on a lean budget.
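One way to picture the "LLM as a first pass" idea is a small triage step: let the model propose a corrected transcript for each segment, then send only the segments where the proposal differs noticeably from the original to human reviewers. This is a minimal sketch, not what anyone in the thread actually built. The function names, the `fake_llm` stand-in, and the 0.9 threshold are all assumptions for illustration, and a text-only LLM can only flag implausible wording, not verify the audio.

```python
from difflib import SequenceMatcher
from typing import Callable


def triage_segments(
    segments: list[dict],
    propose_fix: Callable[[str], str],
    agreement_threshold: float = 0.9,  # assumed cutoff; tune on your own data
) -> tuple[list[dict], list[dict]]:
    """Split segments into (auto_accepted, needs_human).

    Each segment is a dict with an "id" and the model-generated "text".
    """
    auto_accepted, needs_human = [], []
    for seg in segments:
        suggestion = propose_fix(seg["text"])
        # Word-level similarity between the original and the suggested text.
        ratio = SequenceMatcher(None, seg["text"].split(), suggestion.split()).ratio()
        record = {**seg, "suggestion": suggestion, "agreement": round(ratio, 2)}
        (auto_accepted if ratio >= agreement_threshold else needs_human).append(record)
    return auto_accepted, needs_human


def fake_llm(text: str) -> str:
    # Hypothetical stand-in for a real LLM call; it fixes one known error.
    return text.replace("kuber net ease", "Kubernetes")


segments = [
    {"id": 1, "text": "we deploy the model every friday"},
    {"id": 2, "text": "it runs on kuber net ease in production"},
]
accepted, review = triage_segments(segments, fake_llm)
print([s["id"] for s in accepted], [s["id"] for s in review])
```

Agreement between the LLM and the original transcript is not proof of correctness, so it is still worth spot-checking a sample of the auto-accepted segments.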

🧮 What’s the Hardest Part About Building a SQL/Analytics Agent?

Channel: #mlops-questions-answered

Asked by: Stan Shen

Stan asked a big one: Who’s building analytics or SQL agents—and what’s the hardest part? The community answered: it’s the semantics (schema understanding), not the SQL.

Key Insights:

Bartosz Mikulski joked that nobody documents their schemas, so SQL agents have to reverse-engineer them from service code. Ouch.

Médéric Hurier noted that while SQL agents work well in constrained domains, generalizing them is difficult without a semantic layer.

Demetrios shared a podcast with Donné’s insights on LLM-powered SQL querying.

Stan agreed—most agents need more help interpreting schema meanings to scale beyond narrow tasks.

Takeaway: Building analytics agents isn’t just about plugging in an LLM—it’s about untangling decades of undocumented schema chaos. SQL agents are powerful, but without schema documentation or a semantic layer, they risk hallucinating queries.

If your company hasn’t invested in a semantic layer yet, now might be the time. And until your schemas are documented, tools like Tableau Pulse or BigQuery’s LLM-native features might be a safer shortcut than a custom SQL agent.
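To make "schema documentation or a semantic layer" concrete, here is a very small sketch of what a first step might look like: keep a plain-language description next to each table and column, render it as context for the agent's prompt, and list the columns that still have no description. The `Table` and `Column` classes, the helper names, and the `ord_hdr` example schema are all hypothetical; real semantic layers carry much more (metrics, joins, access rules).

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    meaning: str


@dataclass
class Table:
    name: str
    meaning: str
    columns: list[Column] = field(default_factory=list)


def render_schema_context(tables: list[Table]) -> str:
    """Turn documented tables into plain text to prepend to an agent prompt."""
    lines = []
    for t in tables:
        lines.append(f"Table {t.name}: {t.meaning}")
        for c in t.columns:
            lines.append(f"  - {c.name}: {c.meaning}")
    return "\n".join(lines)


def undocumented(tables: list[Table], live_columns: dict[str, set[str]]) -> dict[str, set[str]]:
    """Columns that exist in the database but have no documented meaning."""
    documented = {t.name: {c.name for c in t.columns} for t in tables}
    gaps = {}
    for table, cols in live_columns.items():
        missing = cols - documented.get(table, set())
        if missing:
            gaps[table] = missing
    return gaps


# Hypothetical schema with cryptic names, the kind an agent tends to misread.
docs = [
    Table("ord_hdr", "One row per customer order", [
        Column("cust_id", "Customer who placed the order"),
        Column("amt_net", "Order total in EUR, excluding tax"),
    ])
]
live = {"ord_hdr": {"cust_id", "amt_net", "flg_x"}}

print(render_schema_context(docs))
print(undocumented(docs, live))
```

The second helper is the useful part in practice: it turns "nobody documents their schemas" into a concrete to-do list before the agent is allowed to query those columns.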

⚙️ What’s the Easiest Way to Turn Deep Learning Scripts into Automated AWS Training Jobs?

Channel: #mlops-questions-answered

Asked by: Al Johri

Al Johri asked for tooling tips to automate deep learning fine-tuning jobs—he’s currently running scripts on raw EC2 and wants to move toward proper job orchestration, better observability, and support for PyTorch/Transformers.

Key Insights:

Rafał Siwek suggested trying Ray for minimal code refactoring and solid dashboards. ZenML was also mentioned but may feel heavy for small teams.

Zach shared love for the Metaflow + Argo Workflows combo on Amazon EKS, citing handy decorators and seamless integration with MLflow.

Phil Winder said SageMaker Pipelines are solid if you want to go fully managed—even if they’re pricier.

Prasad Paravatha added that Kubeflow recently improved its PyTorch/DeepSpeed support with a new Python SDK.

raybuhr demystified SageMaker training job setup—it’s just pointing the SDK to your training scripts and letting it handle S3 + containers.

Takeaway: If you’re already on AWS, SageMaker is the lowest-friction path. But if you want fine control or open-source flexibility, Metaflow, Ray, or Argo are great next steps to production-grade training automation.

Whether open-source or managed service, the key to automation is picking a stack that plays well with your existing scripts—and tracks everything for reproducibility.
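For readers who have not seen it, the "point the SDK at your training script" setup raybuhr described looks roughly like the sketch below, using the PyTorch estimator from the SageMaker Python SDK. The script name `train.py`, the `src` folder, the instance type, the framework and Python versions, and the hyperparameters are assumptions to replace with your own values; check the SDK documentation for the version combinations it currently supports.

```python
def build_estimator_kwargs(role_arn: str, instance_type: str = "ml.g5.xlarge") -> dict:
    """Collect the settings for a SageMaker PyTorch training job."""
    return {
        "entry_point": "train.py",    # hypothetical: your existing training script
        "source_dir": "src",          # hypothetical: folder uploaded alongside it
        "role": role_arn,             # IAM role the job runs under
        "instance_count": 1,
        "instance_type": instance_type,
        "framework_version": "2.1",   # assumed PyTorch version
        "py_version": "py310",        # assumed Python version
        # Passed to the script as command-line arguments.
        "hyperparameters": {"epochs": 3, "lr": 2e-5},
    }


def launch_training(role_arn: str, train_s3_uri: str) -> None:
    """Start the job; requires the sagemaker package and AWS credentials."""
    # Imported lazily so the config helper above works without the SDK installed.
    from sagemaker.pytorch import PyTorch

    estimator = PyTorch(**build_estimator_kwargs(role_arn))
    # The SDK uploads the code, starts the container, and mounts the S3 data
    # as a channel named "training".
    estimator.fit({"training": train_s3_uri})


if __name__ == "__main__":
    print(build_estimator_kwargs("arn:aws:iam::123456789012:role/ExampleRole"))
```

Keeping the settings in one function also helps with the reproducibility point above, since the same dictionary can be logged next to each run.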

🧠 Could Microsoft’s Phi-4 Multimodal Instruct Be the Best LLM You’ve Never Heard Of?

Channel: #llmops

Asked by: Ben Epstein

Ben flagged a model that seems to have flown under the radar: Phi-4 Multimodal Instruct. His initial tests were promising but not perfect.

Key Insights:

Ben tested it immediately: decent diarization, questionable transcription accuracy, but super fast on an L40S.

Patrick Barker hadn’t heard about it either but recommended tuning it for better performance.

Ben replied that tuning isn’t worth it for his current use case, and that the model is already the best open-weight option available today for his needs.

Surprise: Microsoft didn’t hype the release, but it’s quietly outperforming many open-weight models out there.

Takeaway: Keep an eye on Hugging Face, because not all breakthroughs are loudly marketed. Phi-4 may not replace commercial APIs today, but it’s worth testing, especially if you run on local GPUs and care about speed and flexibility.
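If you do test a model like this for transcription, it helps to turn "questionable accuracy" into a number. A common choice is word error rate (WER): the word-level edit distance between a trusted reference transcript and the model's output, divided by the number of reference words. The thread did not say how accuracy was judged, so treat this as a generic sketch; the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from the output
                curr[j - 1] + 1,     # extra word in the output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("ships" -> "chips") and one dropped word ("on"): 2 / 5.
print(word_error_rate("the model ships on friday", "the model chips friday"))  # 0.4
```

Running the same reference set through each candidate model gives a like-for-like comparison before deciding whether tuning is worth the effort.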

🤖 MCP vs A2A: Who’s Going to Win the Agent Infrastructure Wars?

Channel: #discussions

Asked by: Mirco Bianchini

Google released A2A, a protocol for agent-to-agent communication, while Anthropic already has MCP. Is this the start of an agent protocol war, or are the two complementary tools for different layers of the agent stack?

Key Insights:

David & Médéric Hurier: A2A and MCP address different layers. A2A handles communication between agents, while MCP governs how agents interact with tools and resources.

Meb: MCP should be seen more as a plugin interface, while A2A addresses hosted agents. Lack of SDKs in A2A is a downside—MCP is more mature there.

Sammer Puran linked to a related article: MCP vs. A2A: Friends or Foes.

Rahul Parundekar raised concerns about trust—do we want black-box agent interactions deciding things in enterprise environments?

Takeaway: A2A and MCP aren’t direct competitors (yet), but as the AI agent stack evolves, security, open SDKs, and tool compatibility may tip the balance.

So don’t get caught in the protocol crossfire—understand what each protocol does best. This is particularly important as players such as OpenAI, Anthropic, and Google are striving for standardization in agent infrastructure.

Use MCP to power your agents and A2A to get them talking.
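The "different layers" point can be illustrated with a toy model in plain Python. It deliberately does not use either protocol's real wire format or SDK. It only shows the split of responsibilities: one agent hands a task to another (the role A2A plays), and the receiving agent calls a named tool to do the work (the role MCP plays). The `Agent` class, its method names, and the `row_count` tool are all invented for this sketch.

```python
from typing import Callable, Optional

# Tool layer (the role MCP plays): an agent calls named tools with arguments.
ToolRegistry = dict[str, Callable[..., object]]


class Agent:
    def __init__(self, name: str, tools: Optional[ToolRegistry] = None):
        self.name = name
        self.tools = tools or {}
        self.peers: dict[str, "Agent"] = {}

    def call_tool(self, tool: str, **arguments) -> object:
        """Agent-to-tool call: look up a tool by name and run it."""
        return self.tools[tool](**arguments)

    # Agent layer (the role A2A plays): agents hand tasks to each other.
    def send_task(self, peer: str, task: dict) -> dict:
        """Agent-to-agent call: delegate a task to a known peer."""
        return self.peers[peer].handle_task(task)

    def handle_task(self, task: dict) -> dict:
        result = self.call_tool(task["tool"], **task["arguments"])
        return {"handled_by": self.name, "result": result}


# The analyst owns a tool; the planner has no tools and delegates instead.
analyst = Agent("analyst", tools={"row_count": lambda table: {"orders": 42}.get(table, 0)})
planner = Agent("planner")
planner.peers["analyst"] = analyst

reply = planner.send_task("analyst", {"tool": "row_count", "arguments": {"table": "orders"}})
print(reply)
```

In this picture the planner never sees how the analyst gets its answer, which is also where the trust concern raised in the thread comes from: the delegating agent only receives the result.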

🎉 That’s all for this edition! Know someone who would love this? Share it with your AI builder friends!

We created 🗣️ BuildAIers Talking: Community Insights 🗣️ to be the pulse of the AI builder community—a space where we share and amplify honest conversations, lessons, and insights from real builders. Every Thursday!

📌 Key Goals for Every Post:

✅ Bring the most valuable discussions from AI communities to you.

✅ Foster a sense of peer learning and collaboration.

✅ Give you and other AI builders a platform to share your voices and insights.
