🛠️ Protocol FOMO, Databricks × Neon, Jules Agents, On-Prem LLM Stacks & Agent Framework Fatigue

Community Insights #7: AG-UI vs MCP, why Databricks grabbed Neon, doubts about Google’s Jules coding agent, vLLM/Ray Serve vs NVIDIA Dynamo for on-prem GPUs, and whether agent frameworks are worth it.

Every other Thursday, we surface the most thought-provoking, practical, and occasionally spicy conversations happening inside the community so you never miss out—even when life gets busy.

As AI builders wrestle with infrastructure complexity, vendor lock-in, and a sea of agent frameworks, conversations from the trenches are revealing what actually works and what’s just marketing fluff.

In this edition of 🗣️ BuildAIers Talking 🗣️, here are the top five hot-topic conversations from the community so you can keep up without the endless scroll:

Do we really need another agent protocol?

Why did Databricks buy Neon—and what does it have to do with agents?

Is Google Jules the new Codex, or just another cloud-bound misfire?

What’s the most reliable open-source LLM serving stack in 2025?

What agent framework should you actually use in production?

Grab a coffee ☕, dive in, learn what others are actually shipping, and decide what’s hype vs. helpful. 👇

🔌 Do We Really Need Another Agent Protocol?

Channel: #agents

Asked by: Demetrios

Yet another agent protocol—AG-UI—entered the scene, prompting the community to ask: Is this truly adding something new, or just protocol FOMO?

Key Insights:

Meb: “Why not stick to OpenAPI + auth? MCP already covers plugins—don’t reinvent the wheel.”

Sammer Puran: Maybe useful for legacy UIs that lack APIs, but tooling support is thin.

Patrick Barker: “Protocol FOMO is real—do we need an AgentOps standard next?”

Shubham shared a blog post critiquing MCP bloat but agreed adoption, not perfection, wins standards wars.

Takeaway:

Before jumping on the next shiny spec, ask what it does that OpenAPI + MCP/A2A + auth gateways don’t. Right now, AG-UI is more “interesting” than “production-ready.”

🚀 Why Did Databricks Buy Neon? Is This an Agent Infra Play?

Channel: #discussions

Asked by: Will Angel

Databricks recently acquired Neon, and that was not on our bingo card. Why is a company best known for Spark and data lakes entering the agent and Postgres database space?

Key Insights:

Ross Katz pointed to vertical integration. “Postgres for RAG is gaining ground,” and Databricks wants the full stack.

Cody Peterson added: agents often use OLTP stores, and Neon gives Databricks a scalable Postgres option. Bonus: it’s a hedge against MotherDuck.

Patrick Barker dropped an interesting stat: Over 80% of new databases on Neon were created by agents.

Qian Li shared that OpenAI runs core infra on Postgres—proof it’s becoming an AI-native DB backbone.

Médéric Hurier argued Databricks needs to evolve fast to compete with full-stack clouds like GCP and AWS in the GenAI space.

Takeaway:

This move signals that Postgres isn’t just surviving—it’s becoming mission-critical for AI workflows. It is fast becoming the memory layer of AI apps. Databricks may be betting on agents to become the new OLTP workload.

🔗 Dive deeper into the acquisition →

💻 Could Google's New "Jules" Coding Agent Replace Your IDE?

Channel: #agents

Asked by: Meb

Google released Jules, an asynchronous coding agent—but does it make sense for developers who prefer local IDEs?

Key Insights:

Cody Peterson said the cloud-only design is a non-starter: “If you can’t run agents locally, dev experience suffers.”

Meb compared it to Firebase—great demo, poor mainstream adoption: “Link GitHub, run in Google Cloud, pay per run? Hard pass.”

Patrick Barker: “Any code generator that isn’t inside my IDE is insane.”

Médéric Hurier questioned whether Google can compete on developer UX: “Besides Chrome, desktop apps aren’t their strong suit.”

Takeaway:

Dev experience still matters. Unless cloud agents deliver massive upside, devs still prefer tools that run where they code—even if that means fewer managed bells and whistles.

🔗 Explore community reactions to Jules →

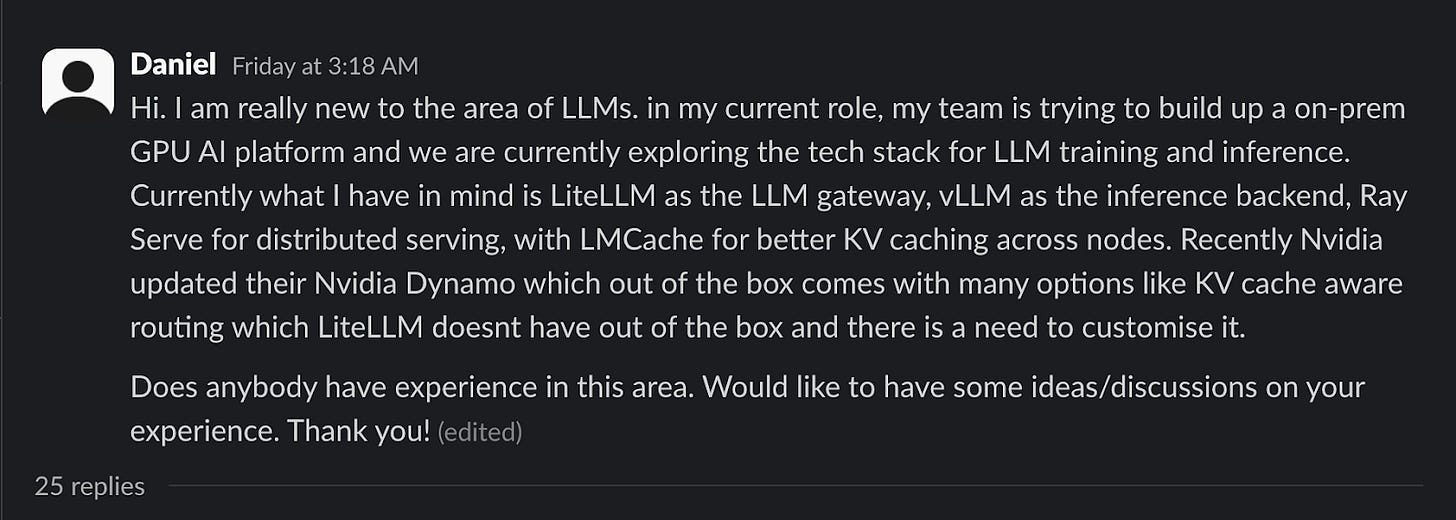

🚀 What’s the Best DIY Stack for On-Prem LLM Serving?

Channel: #llmops

Asked by: Daniel

Daniel’s team is speccing an on-prem GPU stack: LiteLLM → vLLM → Ray Serve (+ LMCache). Would NVIDIA’s new Dynamo inference engine be a better bet?

Key Insights:

Patrick Barker: vLLM + Ray Serve are rock solid; LiteLLM is okay, but Portkey is catching up; avoid k8s if you don’t own GPUs.

Shubham: Cold-start pain with Ray autoscaling; SkyPilot and the llm-d project are promising alternatives (hardware-aware autoscaling, baked-in best practices, disaggregated serving).

Ben Epstein mentioned Modal for on-demand GPUs; lock-in depends on how you wire it.

Eli Mernit shared Beam Cloud—an OSS serverless GPU platform you can self-host.

Takeaway:

Ray Serve + vLLM remains the community’s default, but NVIDIA Dynamo’s baked-in routing + cache might tempt teams who’d rather avoid stitching pieces together.

Your call: decide first whether you need DIY flexibility or managed serverless speed (Modal, Beam), then pick tools that won’t stall on cold starts or quota walls—control vs. convenience.
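To make the DIY stack above a bit more concrete, here is a minimal sketch of how a client might talk to it. It relies on the fact that vLLM ships an OpenAI-compatible HTTP server; the base URL, port, and model name below are placeholder assumptions, not details from Daniel’s setup. A gateway that speaks the same API could, in principle, sit at the same base URL.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for an
    OpenAI-compatible endpoint such as the one vLLM exposes."""
    payload = {
        "model": model,  # placeholder: whatever name the server was started with
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the first choice's text.
    Needs a running server, so it is not called at import time."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Assumed local endpoint and model name, for illustration only.
req = build_chat_request("http://localhost:8000", "my-model", "Hello")
```

Because the client only depends on the OpenAI-style request shape, swapping the serving layer behind it later should mostly be a matter of changing `base_url`.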

🔗 Join the architecture chat →

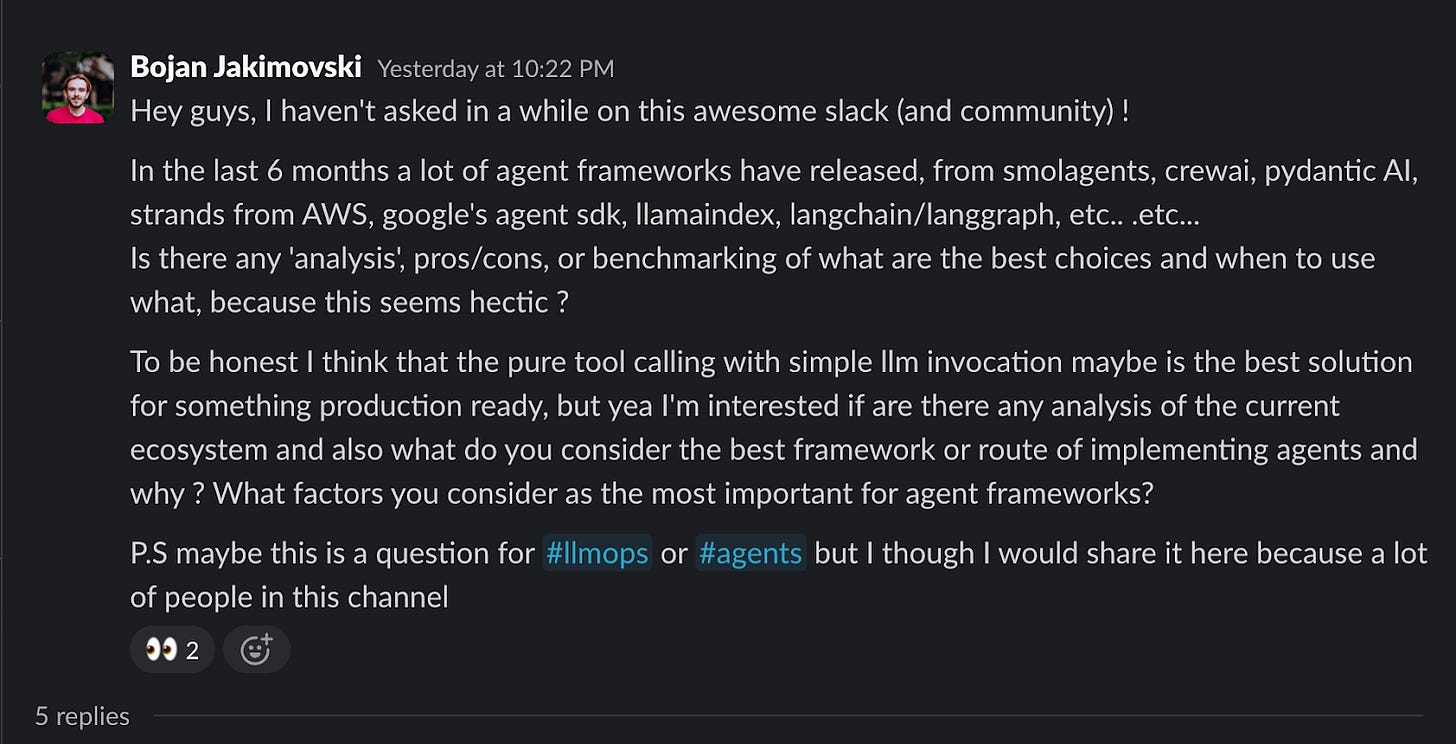

🤔 With 10+ Agent Frameworks, Which One Actually Belongs in Prod?

Channel: #mlops-questions-answered

Asked by: Bojan Jakimovski

Smol-agents, CrewAI, Pydantic AI, LlamaIndex, ADK, LangGraph… How do you choose—or do you need any of them at all?

Key Insights:

Patrick Barker: Most apps don’t need a framework—tool-calling + clean code wins. LangGraph is okay for some DAG-style flows; router libs (Portkey/LiteLLM) solve real problems.

Jude Fisher: Team ditched heavy frameworks—used Semantic Kernel lightly and built minimal orchestration on Azure Durable Functions for serverless benefits (scale and cost).

Médéric Hurier: Google’s Agent Development Kit (ADK) finally “clicked” for modular agents + built-in GCP tools. Divide-and-conquer beats monoliths.

Common thread: Specialize agents, keep the glue minimal, and add frameworks only when they truly reduce complexity.
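For a sense of what “tool-calling + clean code” can look like without a framework, here is a rough sketch of a bare agent loop. Everything in it is hypothetical: `fake_model` stands in for a real LLM call, `get_weather` is a toy tool, and the message shape is a simplification rather than any vendor’s exact schema.

```python
import json
from typing import Callable


def get_weather(city: str) -> str:
    """Toy tool (hypothetical); a real one would call an API."""
    return f"Sunny in {city}"


# Tool registry: plain functions keyed by name.
TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM call (assumption). It asks for one tool call,
    then answers once it sees the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Forecast: {last['content']}"}
    return {
        "role": "assistant",
        "tool_call": {
            "name": "get_weather",
            "arguments": json.dumps({"city": "Paris"}),
        },
    }


def run_agent(user_input: str, model=fake_model, max_steps: int = 5) -> str:
    """Loop: call the model, run any requested tool, feed the result back."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            # No tool requested, so this is the final answer.
            return reply["content"]
        tool = TOOLS[call["name"]]
        result = tool(**json.loads(call["arguments"]))
        messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")
```

Calling `run_agent("What's the weather in Paris?")` walks one tool round-trip and returns the final text. Swapping `fake_model` for a real client is the only piece that would need a provider SDK, which is roughly the point the thread was making about keeping the glue minimal.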

Takeaway:

Most frameworks are still immature. Lightweight, composable approaches (with good infra integration) are winning over bloated abstractions.

Pick a framework that matches your team’s expertise, your workflows, and your project’s scalability needs.

Many teams are opting for custom infrastructure or minimalist frameworks until the ecosystem matures.


Which community discussions should we cover next? Drop suggestions below!

We created ⚡ BuildAIers Talking to be the pulse of the AI builder community—a space where we share and amplify honest conversations, lessons, and insights from real builders. Every other Thursday!

📌 Key Goals for Every Post:

✅ Bring the most valuable discussions from AI communities to you.

✅ Build a sense of peer learning and collaboration.

✅ Give you and other AI builders a platform to share your voices and insights.

Know someone who would love this? Share it with your AI builder friends!