Is LangChain Still Worth Using in 2025?

BuildAIers' Toolkit #10: Does LangChain Still Have a Place in Today’s LLM Workflows?

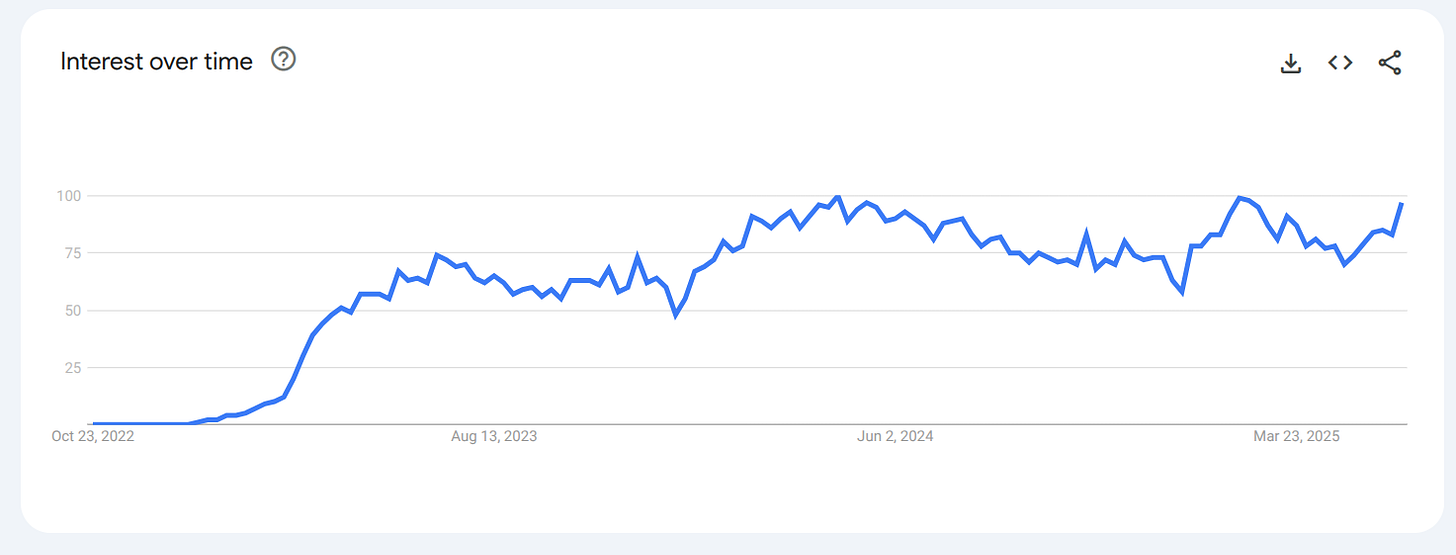

In late 2022, something unexpected took the AI development world by storm, and no, it wasn’t ChatGPT. It was LangChain. On October 24, 2022, Harrison Chase made the first commit to the LangChain repository, introducing a framework like nothing the software industry had seen before.

At the time, language models were isolated systems. They took in text and returned text, nothing more. There were no built-in tools, no retrieval mechanisms, no memory. LangChain emerged to solve this problem by offering a way to orchestrate LLMs with tools, prompts, memory, and retrieval pipelines.

But 2022 feels like a lifetime ago.

Since then, the LLM landscape has changed dramatically. Language models no longer operate in isolation. Major providers now offer built-in capabilities like tool use, function calling, and external data access. Innovations such as OpenAI’s function calling and Anthropic’s Model Context Protocol (MCP) have removed many of the limitations that LangChain originally set out to overcome.

So the question now is: Is LangChain still needed in 2025?

In this article, we’ll explore that question by comparing LangChain to the latest capabilities of the OpenAI API. With the introduction of the Responses API, OpenAI has brought orchestration features directly into the platform.

This article will help you understand how far LLM tooling has come and whether LangChain still deserves a place in your toolbox.

Here’s what we’ll cover:

Prompt management: How prompt handling compares between LangChain and OpenAI

Tool usage: How both platforms enable agentic behavior

Retrieval-Augmented Generation (RAG): LangChain’s stack compared to OpenAI’s native support

Multi-model support: How each handles access to different model providers

Structured outputs: LangChain’s output parsers versus OpenAI’s built-in response formatting

Let’s dive in.

🌱 LangChain's Origin Story

Back in 2022, the APIs offered by language model providers like OpenAI and Cohere were limited in what they could do. You could send in a prompt and get back a response. Text in, text out. That was it.

While the APIs were simple, the research community was already exploring much more. Papers, tweets, and GitHub repositories were demonstrating how LLMs could be extended to perform advanced tasks like using calculators, searching the web, or reasoning step by step simply through clever prompting.

![A tweet from 2022 showing how to prompt GPT-3 to use a calculator [source: X]](https://substackcdn.com/image/fetch/$s_!V8uI!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Faf1e9b4a-4c06-482e-8832-3770be4a5647_745x734.png)

Research like “Self-Ask” had working implementations, and developers were beginning to realize the hidden potential of LLMs. But all this knowledge was scattered across the internet.

That’s when Harrison Chase stepped in. He began collecting those ideas and turning them into a unified framework. What he was working on would soon become LangChain, the first full-featured LLM orchestration library.

At launch, LangChain supported just two providers: OpenAI and Cohere. But it allowed developers to do more than just send prompts. It enabled models to perform tasks like web search and math calculations, which targeted the two areas where LLMs struggled most at the time: hallucination and arithmetic.

LangChain didn’t get much attention until early 2023, when more developers jumped into the LLM space and quickly ran into the same limitations: hallucinations, weak reasoning, prompt engineering complexity, and lack of tooling.

![A user comment from the Patrick Loeber YouTube video on LangChain released April 2023 [source: YouTube]](https://substackcdn.com/image/fetch/$s_!JVdI!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F8963161d-c8d5-40f4-9ac2-ff8a96955f04_1141x123.png)

By this time, LangChain had rapidly expanded its feature set:

Composable chains for combining multiple LLM calls and tools

Retrieval-Augmented Generation (RAG) support

Memory integration for maintaining conversational context

Embedding model support for search and retrieval

For many developers, LangChain became the easiest way to start building serious LLM applications. It was a dream toolkit for rapid experimentation.

But as LangChain grew, so did the criticism. Many in the software community began to argue that LangChain was too complex, too abstract, and not production-ready. Some preferred the simplicity and control of using the OpenAI SDK directly.

On Reddit, Twitter, and forums, there was no shortage of frustration over its design choices.

![A user expressing their frustration with LangChain [source: discussion thread].](https://substackcdn.com/image/fetch/$s_!Wjw9!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F4df1bedb-0731-4c77-bb27-2d523b139f5f_1176x298.png)

This article isn’t about those criticisms.

Instead, we want to focus on a bigger question: Is LangChain still necessary in 2025?

LangChain was designed to orchestrate the complex workflows that LLMs couldn’t handle alone. But now, model providers themselves offer orchestration capabilities, including built-in function calling, retrieval, memory, and even agent-like behaviors.

So, where does LangChain fit in today’s LLM stack?

That’s what we’re here to explore.

🗂️ Prompt Management

One of LangChain’s early selling points was its help with prompting. It shipped built-in prompts that developers could use to reach their goals. In 2025, though, heavy “prompt engineering” is needed far less often, mostly because language models have improved: a plain zero-shot prompt is now often enough to get a task done accurately.

When LangChain first came out, its maintainers drew prompting techniques from the research community and carefully crafted new prompts. At the time, before OpenAI released the Chat Completions API and chat-tuned models became common, LangChain played a crucial role in simulating chat-style interactions through prompt templates like this:

"""The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

Current conversation:

{history}

Human: {input}

AI:"""

These types of prompt templates became less common with the introduction of the Chat Completions API.
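To make that shift concrete, here is a minimal sketch contrasting the two styles. The template text is the one quoted above; the helper names (`render_completion_prompt`, `render_chat_messages`) and the sample conversation are made up for illustration and are not LangChain or OpenAI APIs.

```python
# Completion-era style: the whole conversation is flattened into one string.
TEMPLATE = (
    "The following is a friendly conversation between a human and an AI. "
    "The AI is talkative and provides lots of specific details from its context. "
    "If the AI does not know the answer to a question, it truthfully says it "
    "does not know.\n\n"
    "Current conversation:\n{history}\nHuman: {input}\nAI:"
)


def render_completion_prompt(history: list[tuple[str, str]], user_input: str) -> str:
    """Flatten (speaker, text) turns into the single-string prompt."""
    lines = "\n".join(f"{speaker}: {text}" for speaker, text in history)
    return TEMPLATE.format(history=lines, input=user_input)


def render_chat_messages(history: list[tuple[str, str]], user_input: str) -> list[dict[str, str]]:
    """Chat-style equivalent: role-tagged messages, no hand-written template."""
    role_for = {"Human": "user", "AI": "assistant"}
    messages = [{"role": role_for[speaker], "content": text} for speaker, text in history]
    messages.append({"role": "user", "content": user_input})
    return messages


history = [("Human", "Hi there"), ("AI", "Hello! How can I help?")]
prompt = render_completion_prompt(history, "What is LangChain?")
messages = render_chat_messages(history, "What is LangChain?")
print(prompt)
print(messages)
```

With a chat-style API, the role structure carries what the template used to spell out by hand, which is why templates like this one faded.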

Back then, prompts were also used to get models to use tools. But that changed when OpenAI introduced function calling, which quickly became the standard. As a result, more model providers began fine-tuning their models to support tool use through function calls, making it much easier for developers to integrate tools without relying on complex prompt patterns.

There was also a time when prompts like “think step by step” were widely used to guide reasoning, but with the rise of reasoning models, many LLMs can now guide themselves without relying on predefined prompt templates.

With all these improvements, developers today can leverage the power of LLMs more easily and effectively, without needing to depend as heavily on frameworks like LangChain.

🧰 Tool Usage

Tool use was one of the main reasons developers turned to LangChain when it first launched. At the time, language models couldn’t natively use tools, so LangChain filled the gap by offering a way to extend LLM capabilities through tool integration.

LangChain provided built-in support for connecting models to tools such as:

Web search (e.g., using SerpAPI or Bing Search)

Calculators for basic and complex math

Python REPLs for running code snippets

These integrations were orchestrated using agentic patterns like ReAct and MRKL, enabling models to decide which tool to use based on the conversation.
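For readers who never saw these patterns up close, the sketch below shows the rough shape of a ReAct-style loop: the model writes a thought and an action as plain text, the framework parses it, runs the tool, and feeds the observation back. This is a simplified illustration, not LangChain’s implementation. The `scripted_model` function stands in for a real LLM call, and the text format and all names are assumptions.

```python
import operator
import re
from typing import Callable

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Toy calculator tool: evaluates 'a op b' for + - * /."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}
ACTION_RE = re.compile(r"Action: (\w+)\nAction Input: (.+)")


def scripted_model(prompt: str) -> str:
    """Stand-in for an LLM: asks for a tool first, then answers from the observation."""
    if "Observation:" not in prompt:
        return "Thought: I need to multiply.\nAction: calculator\nAction Input: 12 * 7"
    observation = prompt.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"


def react_loop(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate between model replies and tool calls until a final answer appears."""
    prompt = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = model(prompt)
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"Could not parse an action from: {reply!r}")
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input.strip())
        # Append the exchange so the next model call can see the tool result.
        prompt += f"{reply}\nObservation: {observation}\n"
    raise RuntimeError("No final answer within max_steps")


answer = react_loop("What is 12 * 7?", scripted_model)
print(answer)
```

Everything here depends on the model sticking to a text format that a regular expression can parse, which is the fragility that native tool calling later removed.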

Now, LLM providers support tool usage natively:

Function Calling: Language models like those from OpenAI allow developers to define functions as schemas. The model can then decide when to use a function during execution.

Built-in Tools: Models from OpenAI, Google, and Anthropic increasingly support built-in tools such as search, code execution, and more.

MCP: Anthropic’s Model Context Protocol (MCP) provides a standardized way to extend language models with tools and additional context.

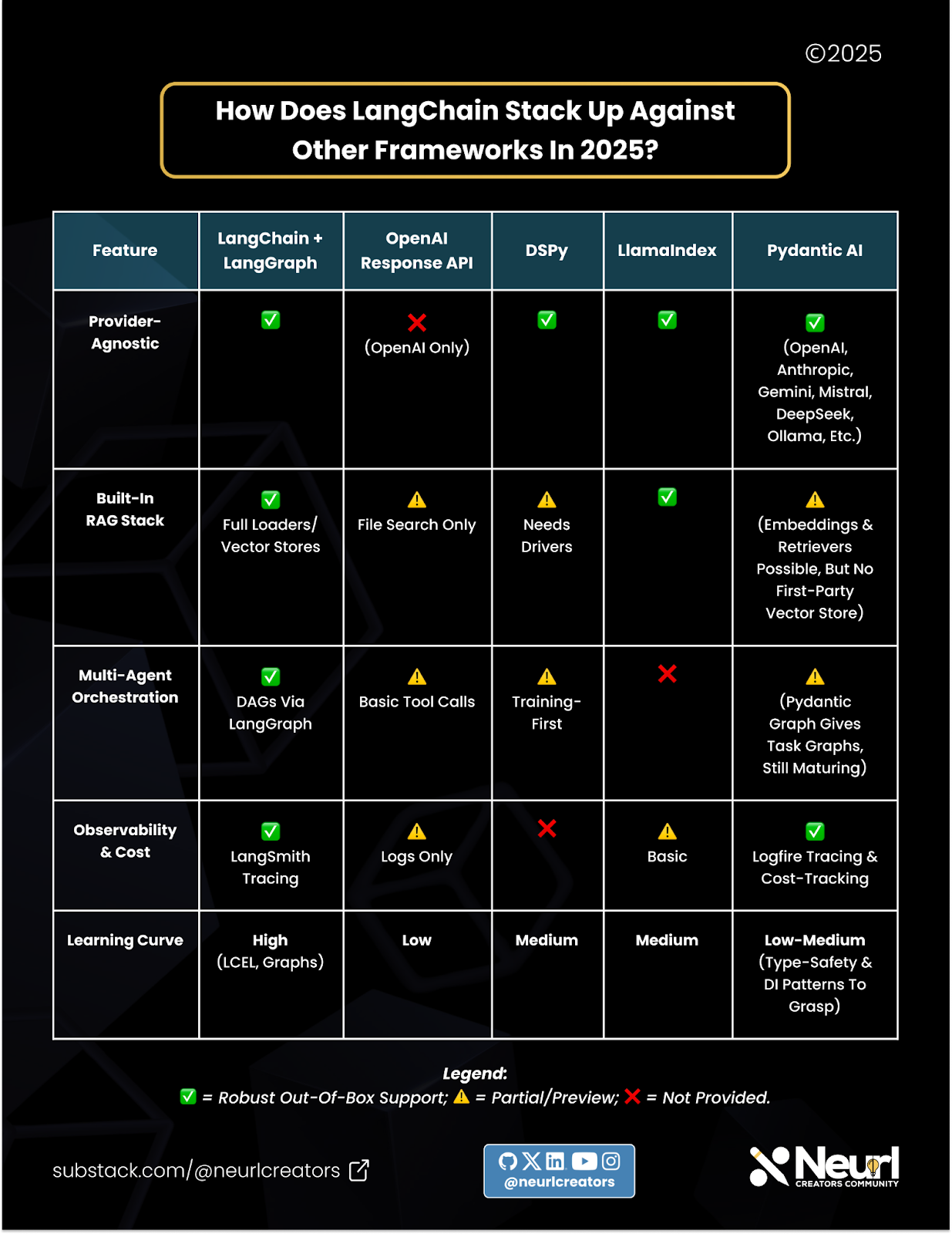
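The function-calling flow described above can be sketched without any network calls. In this illustration the schema follows the JSON Schema style used for function calling (the exact envelope differs between APIs and providers, so treat the shape as an assumption), `get_weather` is a hypothetical tool, and `simulated_call` stands in for what a model would return.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    """Hypothetical tool; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


# What the model is shown: a name, a description, and a JSON Schema for the arguments.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

# Maps tool names the model may emit to the Python callables that implement them.
REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(tool_call: dict[str, str]) -> str:
    """Run the function the model asked for and return its result as JSON text."""
    func = REGISTRY[tool_call["name"]]
    kwargs = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    return json.dumps(func(**kwargs))


# Simulated model output; in a real application this comes from the API response.
simulated_call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
result = dispatch(simulated_call)
print(result)
```

The model only ever proposes a call. Your code still decides whether and how to execute it, then sends the result back for the final answer.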

LangChain also supports function calling and MCP, and it offers a large collection of tools for developers to choose from. However, tool usage is no longer the framework’s main value proposition. For complex agentic workflows, even LangChain itself recommends using LangGraph instead of relying solely on the core framework.

🔍 Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation, or RAG, was one of the hottest topics in AI. Everyone wanted to connect their LLMs to external knowledge sources, largely due to the limited context length of models at the time. LangChain quickly became the go-to solution for this. It provided a complete RAG pipeline, including:

Loaders to pull data from external sources

Vector stores for storing embedded data

Retrievers for managing retrieval logic

Chunking strategies to break down documents into manageable pieces that fit within context limits
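To show how those pieces fit together, here is a toy end-to-end sketch in plain Python. It is not LangChain code: the word-count “embedding” is a deliberately crude stand-in for a real embedding model, and `chunk`, `embed`, and `VectorStore` are hypothetical names chosen for this example.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 10, overlap: int = 2) -> list[str]:
    """Split text into overlapping windows of `size` words."""
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (real systems use a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """In-memory store of (vector, text) pairs with brute-force search."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, texts: list[str]) -> None:
        for text in texts:
            self.items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vector, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


# "Loader" step: here the document is just an inline string.
DOCUMENT = (
    "LangChain was first committed in October 2022. "
    "It offers loaders, vector stores, and retrievers for RAG. "
    "Chunking splits long documents into pieces that fit a context window."
)

chunks = chunk(DOCUMENT, size=10, overlap=2)
store = VectorStore()
store.add(chunks)
top = store.search("Which parts handle vector stores and retrievers?", k=1)
print(top[0])
```

In a real pipeline, the retrieved chunks would then be placed into the prompt alongside the user’s question. Each of these steps is a point where a framework like LangChain lets you swap in a different implementation.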

Later on, OpenAI introduced a file search tool in its Assistants API (initially in beta) and eventually rolled it into the more stable Responses API. This lets users upload files to OpenAI’s built-in vector store, so OpenAI handles the entire retrieval process and users do not have to manage chunking, embeddings, or vector storage themselves.

    from openai import OpenAI

    # The client reads the API key from the OPENAI_API_KEY environment variable.
    client = OpenAI()

    response = client.responses.create(
        model="gpt-4o-mini",
        input="What is deep research by OpenAI?",
        tools=[{
            "type": "file_search",
            # ID of a vector store that already holds the uploaded files.
            "vector_store_ids": ["<vector_store_id>"],
        }],
    )

    # output_text is the SDK's shortcut for the generated text.
    print(response.output_text)

While OpenAI’s approach is much simpler to use, it’s also less flexible. LangChain, on the other hand, gives developers full control over the RAG pipeline, including choice of vector store, chunking logic, retrievers, and more. This can be critical when working with non-OpenAI models or when you need fine-grained control over retrieval behavior.

Some may argue that the need for RAG has diminished with the advent of long-context models, but it still plays a vital role in many applications. And when it comes to building a customized RAG pipeline, LangChain remains a strong option.

🌐 Access to Multiple Model Providers

OpenAI was the dominant provider when language model APIs first became available. However, LangChain anticipated a future with multiple providers and built its framework to support them from the start.

As new providers entered the space, LangChain quickly integrated them, making it easy for developers to swap between models. This flexibility was one of the key reasons LangChain gained popularity early on.

Today, OpenAI’s own SDK can talk to other model providers, as long as they expose an OpenAI-compatible API: developers point the client at a different base URL and keep the rest of their code. Providers like Google, Anthropic, and Ollama have adopted this approach, allowing developers to use their models through a unified interface.

Some newer providers, like DeepSeek, didn’t even bother building a separate SDK. They simply conformed to the OpenAI spec from the start. This works well for both sides: model providers can focus on training and deploying their models, while users benefit from immediate access using tools they’re already familiar with.
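In practice, switching providers this way mostly comes down to changing the client’s `base_url`. The sketch below assumes the `openai` Python package is installed. The base URLs, model names, and environment variable names in `PROVIDERS` are assumptions to check against each provider’s documentation, and `client_kwargs` and `ask` are hypothetical helpers.

```python
import os
from typing import Optional

# Assumed endpoints and model names; verify against each provider's docs.
PROVIDERS = {
    "openai": {
        "base_url": "https://api.openai.com/v1",
        "model": "gpt-4o-mini",
        "key_env": "OPENAI_API_KEY",
    },
    "deepseek": {
        "base_url": "https://api.deepseek.com",
        "model": "deepseek-chat",
        "key_env": "DEEPSEEK_API_KEY",
    },
    "ollama": {
        "base_url": "http://localhost:11434/v1",
        "model": "llama3.2",
        "key_env": "OLLAMA_API_KEY",  # a local server typically ignores the key
    },
}


def client_kwargs(provider: str) -> dict[str, str]:
    """Build the constructor arguments that differ between providers."""
    config = PROVIDERS[provider]
    return {
        "base_url": config["base_url"],
        # The client requires a non-empty key, so fall back to a placeholder.
        "api_key": os.environ.get(config["key_env"], "not-set"),
    }


def ask(provider: str, question: str) -> Optional[str]:
    """Send one chat message to the chosen provider through the OpenAI SDK."""
    from openai import OpenAI  # imported here so the config works without the SDK

    client = OpenAI(**client_kwargs(provider))
    completion = client.chat.completions.create(
        model=PROVIDERS[provider]["model"],
        messages=[{"role": "user", "content": question}],
    )
    return completion.choices[0].message.content
```

Calling `ask("ollama", "Say hello in one word.")` would send the request to a local Ollama server, while `ask("deepseek", ...)` would hit DeepSeek’s hosted API with the same code path. Compatibility layers do not always cover every feature, so provider-specific options may still require the native SDK.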

In contrast, with LangChain, users often have to wait for official or community-built integrations to be added before they can access a new model, adding friction to the development process.

🧩 Structured Outputs

The output from LLMs was originally unstructured text, which made it challenging to parse and use reliably. LangChain addressed this by introducing output parsers, letting developers define expected structures and automatically interpret the model’s responses.
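Conceptually, an output parser takes the model’s free-form text, pulls out the structured part, and validates it. The sketch below is a hand-rolled stand-in for that idea using only the standard library. It is not LangChain’s parser API, and `parse_sentiment` and the sample `raw_output` are invented for illustration.

```python
import json
import re
from dataclasses import dataclass

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class SentimentResponse:
    sentiment: str


def parse_sentiment(raw: str) -> SentimentResponse:
    """Pull the JSON object out of free-form model text and validate it."""
    # Grab everything from the first '{' to the last '}' in the reply.
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        raise ValueError("No JSON object found in model output")
    data = json.loads(match.group(0))
    sentiment = data.get("sentiment")
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment: {sentiment!r}")
    return SentimentResponse(sentiment=sentiment)


# Models often wrap the JSON in chatty text, which is why parsing was needed.
raw_output = 'Sure! Here is the result:\n{"sentiment": "positive"}'
parsed = parse_sentiment(raw_output)
print(parsed.sentiment)
```

Parsing after the fact can only reject bad output. It cannot stop the model from producing it, which is the gap native structured outputs close.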

Today, structured outputs are supported natively by LLM providers including OpenAI, Google, and Anthropic. With OpenAI’s SDK, for example, a Pydantic model serves as the schema:

    from typing import Literal

    from openai import OpenAI
    from pydantic import BaseModel

    class SentimentResponse(BaseModel):
        sentiment: Literal["positive", "neutral", "negative"]

    client = OpenAI()

    response = client.responses.parse(
        model="gpt-4o-mini",
        input=[
            {"role": "system", "content": "Classify the review sentiment."},
            {"role": "user", "content": "The service was excellent!"},
        ],
        text_format=SentimentResponse,  # the schema constrains the model's output
    )

    # output_parsed holds a SentimentResponse instance.
    print(response.output_parsed.sentiment)  # e.g., "positive"

🧑⚖️ Final Verdict: Is LangChain Still Worth Using in 2025?

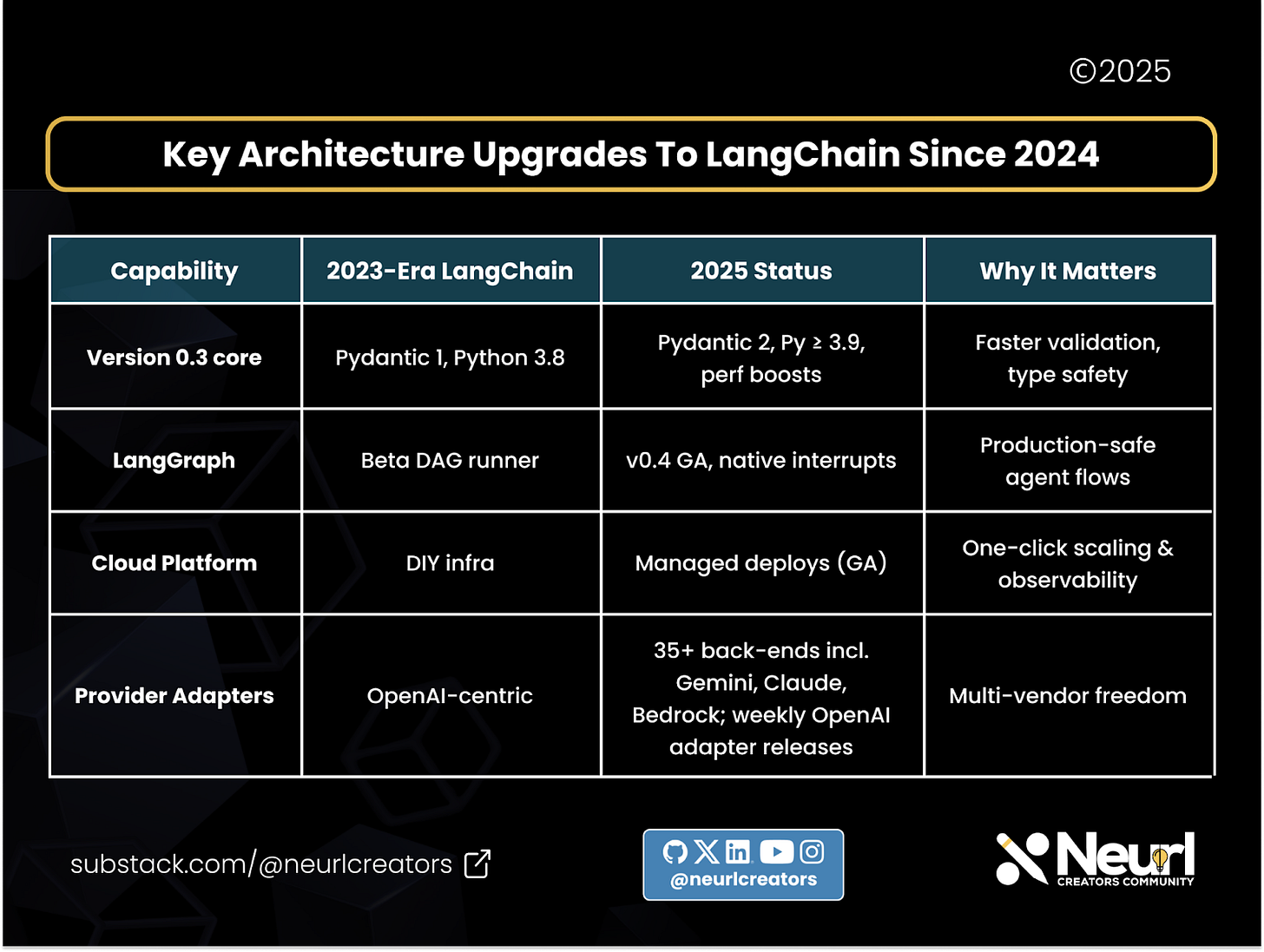

Absolutely. Despite some criticism and the fact that major providers like OpenAI now offer many of LangChain’s features out of the box, LangChain remains a powerful and relevant tool thanks to several key strengths.

Pros:

Open source and actively maintained: LangChain continues to receive frequent updates and improvements from an active community.

Multi-model support: It supports smaller or open-source LLMs that may lack native features like function calling or structured output.

Strong RAG toolkit: LangChain offers a robust pipeline for retrieval-augmented generation, with built-in loaders, vector stores, and customizable retrieval logic.

Rich ecosystem: Beyond its core library, LangChain includes LangGraph for multi-agent workflows and LangSmith for observability and debugging.

Cons:

Steeper learning curve: Its extensive feature set can be overwhelming for beginners.

Complex abstractions: You’ll need to learn LangChain-specific concepts like the LangChain Expression Language (LCEL).

Documentation navigation: The abundance of content and component layers can make the docs difficult to navigate.

🔚 Final Take

When choosing an AI orchestration approach in 2025, you generally have three options:

Use an open-source orchestration framework like LangChain

Rely directly on a model provider’s SDK, such as OpenAI’s

Build your own orchestration layer from scratch

Each option comes with its own set of trade-offs, ranging from flexibility and control to simplicity and ease of integration. The good news is that, unlike the early days of LLMs, developers today have a rich ecosystem of choices to suit different project needs and levels of complexity.

What tools or stacks do you want us to break down next? Drop a comment.