🔐 Securing LLM Agents: How AI Teams Prevent Unauthorized Access & Data Leaks

🛠️ Community Insights #2: LLMs powering AI Agents are now handling sensitive data. How can AI teams prevent unauthorized access and enforce security in AI workflows?

The discussions and insights in this post originally appeared in the MLOps Community Newsletter as part of our weekly column contributions for the community (published every Tuesday and Thursday).

Want more deep dives into MLOps conversations, debates, and insights? Subscribe to the MLOps Community Newsletter (20,000+ builders)—delivered straight to your inbox every Tuesday and Thursday! 🚀

AI agents are becoming super important for businesses, but as they get more access to sensitive data, we need to figure out who controls what they can see.

It's like, we have rules for who can see what, but what about AI? Can they get around those rules or figure out confidential stuff just from being around the data?

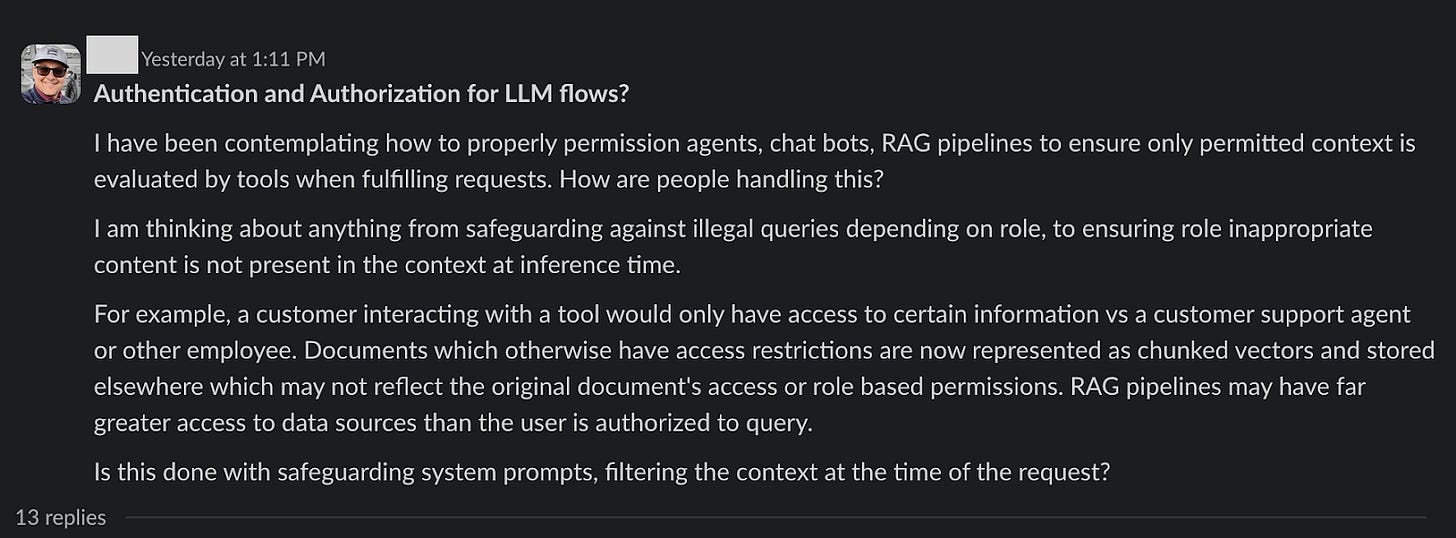

In a recent discussion in the mlops-community, a member raised a critical issue: how do we secure AI agents without breaking usability?

So, what do other builders think? 🤔 Let’s see! ⬇️

⏩ Quick Summary:

AI agents now handle critical enterprise data—but are they secured like human users? Traditional RBAC (Role-Based Access Control) works well for human users and service accounts, but AI agents introduce new security risks that require fresh solutions.

For teams that want to handle authentication in AI workflows, the best approach combines pre-index access filtering, post-retrieval checks, and structured access policies to safeguard data.

🔥 Best Practices from Other Builders Handling Authorization with AI Agents

1️⃣ Pre-index Filtering (Before Vectorization): Amy Bachir recommends enforcing access policies before chunking and embedding documents to ensure only permitted data gets indexed (for the authorized user groups or roles).

2️⃣ Post-retrieval Filtering (Before Inference): Filter the retrieved vectors based on user role in your workflow before sending them to the LLM, using metadata like {doc_id, access_level, user_roles}.

3️⃣ Query Guardrails & Prompt Filtering (Not a Solution): System prompts can instruct the model not to reveal unauthorized data. However, you should not rely on them as the primary enforcement mechanism—they are weak enforcement mechanisms. Filtering before inference is key—preventing unauthorized data from ever reaching the LLM is a safer practice.

4️⃣ LLM-Aware Auth Strategies: Will Callaghan pointed out that existing auth vendors focus on app-level permissions, not fine-grained retrieval access within LLMs. The industry is moving toward defining new authorization patterns for AI agents.

5️⃣ Using Open Policy Agent (OPA) & Vector DB Filtering: Amy Bachir also suggested combining vector database access controls with dynamic, fine-grained filtering via OPA to create a multi-layered security approach.

🔎 Is This a Solved Problem?

❌ Existing Auth Vendors: LlamaIndex and LangChain don’t yet offer built-in solutions for fine-grained retrieval access control—most frameworks focus on app-level permissions.

🌱 Early Solutions:

Vector DB filtering (Weaviate, Pinecone) for basic RBAC.

LlamaIndex + Open Policy Agent (OPA) for dynamic, fine-grained filtering before LLM inference (Amy Bachir).

New Research & Tools:

Papers outlining AI agent security on arXiv (Agent Security Models, Fine-Grained AI Access Control) (Will Callaghan).

Composio AgentAuth—an early attempt at AI agent authentication but still an integration tool rather than a fully-fledged security solution.

💸 VCs are Taking Note: Investors actively discuss the need for AI-native auth systems (LinkedIn post) (Will Callaghan).

🤔 The Debate: Are New Security Models Actually Needed?

Some members think LLMs can be treated like regular users in an RBAC system. Others think that agents need new permission systems because they can reason, act on their own, and synthesize data from different sources.

💬 Community Perspectives:

Cody Peterson: "Agents are just users with permissions, like any service account. Why do we need new security models?"

KK: "It’s not just about the consumer setting access—it’s also about data sources applying policies to AI agents, like YouTube enforcing content rules for children or travel platforms treating AI-driven bookings differently from human ones."

Will Callaghan: "We don’t necessarily need entirely new security frameworks, but we do need to rethink how agents interact within existing systems."

📢 What's Next?

As LLMs and AI agents take on more autonomous decision-making roles, the industry is still figuring out how to enforce granular access control while ensuring compliance.

Right now, pre-index filtering, metadata tagging, and post-retrieval validation seem to be the best strategies.

🔎 How is your team securing AI agents? Do you think AI agents should follow traditional RBAC, or do we need entirely new security models? Drop your experiences in the comments. 🚀

We created ⚡ BuildAIers Talking to be the pulse of the AI builder community—a space where we share and amplify honest conversations, lessons, and insights from real builders. Every Thursday and Friday!

📌 Key Goals for Every Post:

✅ Bring the most valuable discussions from AI communities to you.

✅ Foster a sense of peer learning and collaboration.

✅ Give you and other AI builders a platform to share your voices and insights.