[Infographics] OpenAI Responses API—Built-in Tools, Agentic State and Semantic Streaming

Visual Assets: Why OpenAI’s new Responses API might replace Chat Completions for agent-heavy MLOps workflows.

The OpenAI Responses API is a 2025 upgrade to how developers interact with LLMs, designed specifically for agentic workflows.

The API combines the declarative message format of Chat Completions (familiar, stateless message interface) with the agent tooling born in the Assistants API (tool support, state management, threads).

Out of the box, you get:

Built-in tools (web search, file search, image generation, Code Interpreter, computer-use).

Optional statefulness via previous_response_id, which resumes a conversation without managing thread objects yourself; set store=false for fully stateless calls that are not stored on OpenAI's side.

Semantic event streaming (typed events for text deltas, tool calls, and response lifecycle stages such as created and completed).

Remote MCP server support for connecting to external tools hosted on any compatible MCP server.

The result? A single, unified API that supports complex, multi-turn, tool-driven agents with far less hand-written orchestration.
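With store=false nothing is persisted server-side, so previous_response_id has nothing to point at and the client must resend the conversation itself. Below is a minimal sketch of that stateless pattern, assuming the openai Python SDK's client.responses.create accepts a list of role/content messages as input; the helper names add_turn and ask_stateless are hypothetical.

```python
def add_turn(history: list[dict], role: str, text: str) -> list[dict]:
    """Return a new history with one message appended (hypothetical helper)."""
    return history + [{"role": role, "content": text}]


def ask_stateless(client, history: list[dict], question: str, model: str = "gpt-4o"):
    """Send the full history plus a new question; nothing is stored server-side."""
    history = add_turn(history, "user", question)
    resp = client.responses.create(
        model=model,
        input=history,  # the whole conversation travels with every request
        store=False,    # opt out of server-side storage
    )
    # Record the assistant's answer so the next turn carries full context.
    return resp.output_text, add_turn(history, "assistant", resp.output_text)
```

Usage, assuming the SDK is installed: `answer, history = ask_stateless(OpenAI(), [], "Name one built-in tool.")`, then pass `history` into the next call. The client is passed in rather than created inside the function, which keeps the helper easy to test with a fake client.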

Think of it as a one-stop agent platform that still fits into a single HTTPS call.
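To make "a single HTTPS call" concrete, here is a minimal sketch of the same request without the SDK, using only Python's standard library. It assumes the endpoint https://api.openai.com/v1/responses with a bearer API key; the helper names are hypothetical, and error handling and retries are omitted.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/responses"


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Build the single POST /v1/responses call as a plain HTTPS request."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(body: dict) -> str:
    """Join the output_text parts of message items.

    Roughly what the Python SDK's output_text convenience property computes.
    """
    parts = []
    for item in body.get("output", []):
        if item.get("type") != "message":
            continue  # skip tool-call items such as web_search_call
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content.get("text", ""))
    return "".join(parts)


def call_responses(api_key: str, payload: dict) -> str:
    """Send the request over the network and return the model's text."""
    with urllib.request.urlopen(build_request(api_key, payload)) as resp:
        return extract_text(json.load(resp))
```

For example, `call_responses(os.environ["OPENAI_API_KEY"], {"model": "gpt-4o", "input": "Hello", "tools": [{"type": "web_search_preview"}]})` sends model, input, and tools in one request body.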

⚙️ How Does OpenAI’s Responses API Work?

1. Unified Interface:

Simplifies access to input, model, tools, and state management through a single endpoint (POST /v1/responses, exposed as client.responses.create() in the Python SDK).

2. Optional Stateful Conversations:

Carries conversational context forward when you pass previous_response_id=resp.id; the earlier turns are reloaded server-side, which only works if that response was stored (store defaults to true).

3. Built-in Tools:

Directly integrates powerful tools, reducing manual orchestration:

Web Search (web_search_preview)

File Search (vector store integration; file_search)

Image Generation (native GPT-image model access; image_generation)

MCP Protocol Support (external knowledge bases and APIs; mcp)

Each tool is a JSON object {type: "...", …}; the model decides when to invoke it.

4. Semantic Streaming:

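Streams typed events (for example response.created, response.output_text.delta, and response.completed) instead of raw text chunks, so a client can handle text, tool-call progress, and status updates separately.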
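A minimal sketch of consuming those events, assuming the Python SDK's stream=True mode, which yields event objects with a .type field (text-delta events also carry .delta). The function name render_stream is hypothetical, and only a few event types are handled explicitly.

```python
def render_stream(events) -> str:
    """Consume Responses API stream events and return the final text.

    Text arrives as response.output_text.delta events; other event types
    (lifecycle, tool progress) are logged instead of being mixed into the text.
    """
    chunks = []
    for event in events:
        if event.type == "response.output_text.delta":
            chunks.append(event.delta)
        elif event.type == "response.completed":
            break  # the response has finished; stop reading
        elif event.type == "error":
            raise RuntimeError(getattr(event, "message", "stream error"))
        else:
            print(f"[event] {event.type}")  # e.g. response.created, tool-call progress
    return "".join(chunks)
```

With the SDK this would be used as `print(render_stream(client.responses.create(model="gpt-4o", input="Summarize today’s top AI news.", stream=True)))`. Taking any iterable of events keeps the handler testable with fake events and lets a UI swap the `print` for its own status updates.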

Quick Spin‑up (Python Example):

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# 1️⃣ first turn
# (stream=True would return an event iterator with no .output_text or .id,
#  so this call stays non-streaming)
resp = client.responses.create(
    model="gpt-4o",
    input="Summarize today’s top AI news in 3 bullets.",
    tools=[{"type": "web_search_preview"}],  # ← built-in search
)

print(resp.output_text)

# 2️⃣ follow-up with memory + MCP
resp2 = client.responses.create(
    model="gpt-4o",
    previous_response_id=resp.id,  # ← keeps context
    input="Now fetch the source link for bullet #1.",
    tools=[
        {"type": "web_search_preview"},  # ← tools aren't inherited; declare them again
        {
            "type": "mcp",
            "server_label": "deepwiki",
            "server_url": "https://mcp.deepwiki.com/mcp",
            "require_approval": "never",
        },
    ],
)

print(resp2.output_text)
```

That’s one endpoint, no extra state plumbing, and live web search if the model decides it needs it. Need to add RAG? First upload docs → vector store; then:

resp3 = client.responses.create(

model="gpt-4o-mini",

previous_response_id=resp2.id,

input="In one paragraph, explain MCP transport protocols.",

tools=[{

"type": "file_search",

"vector_store_ids": ["vs_MCP_docs"]

}]

)

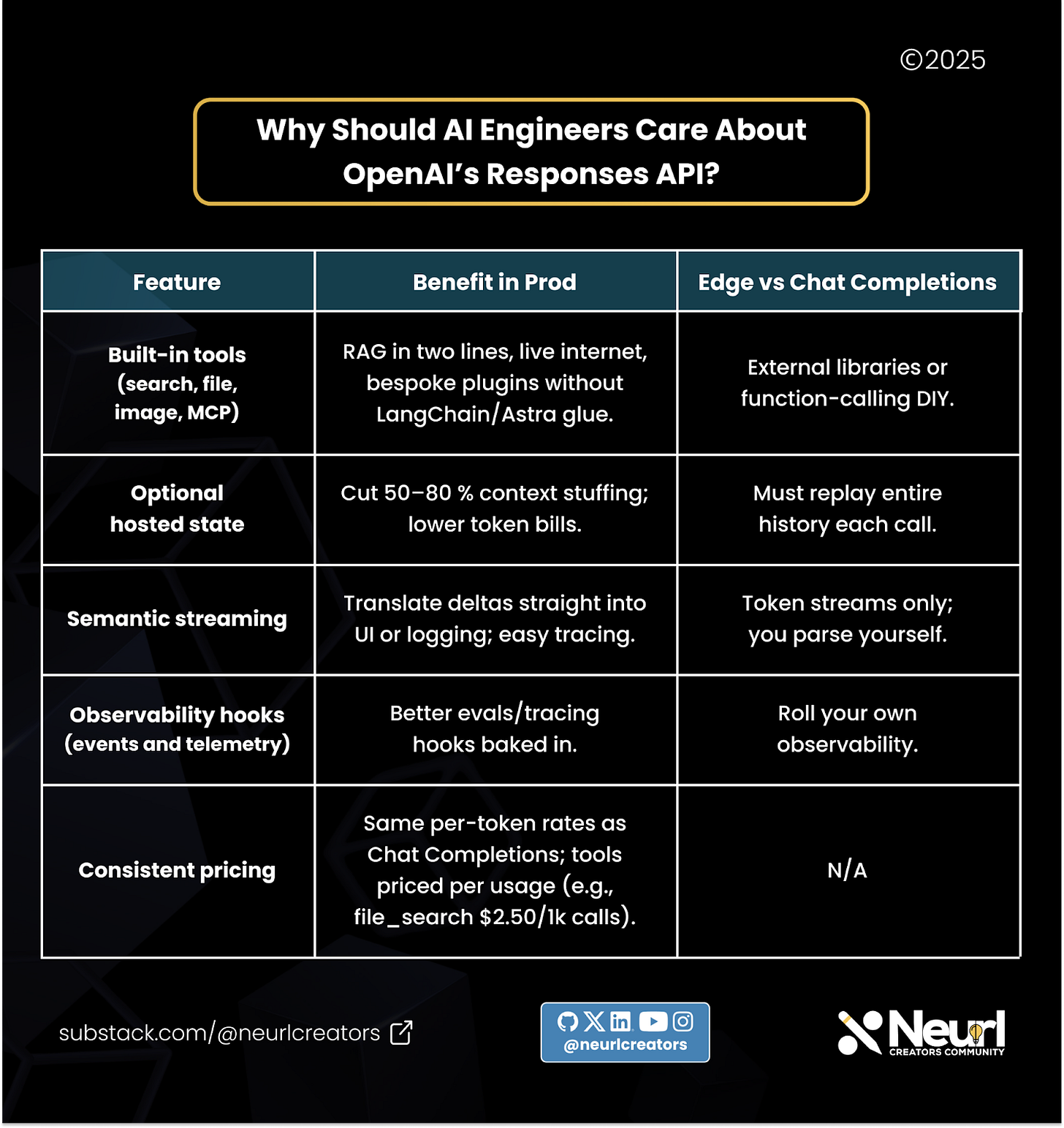

print(resp3.output_text)💡 Why Should AI Builders Care About OpenAI’s Responses API?

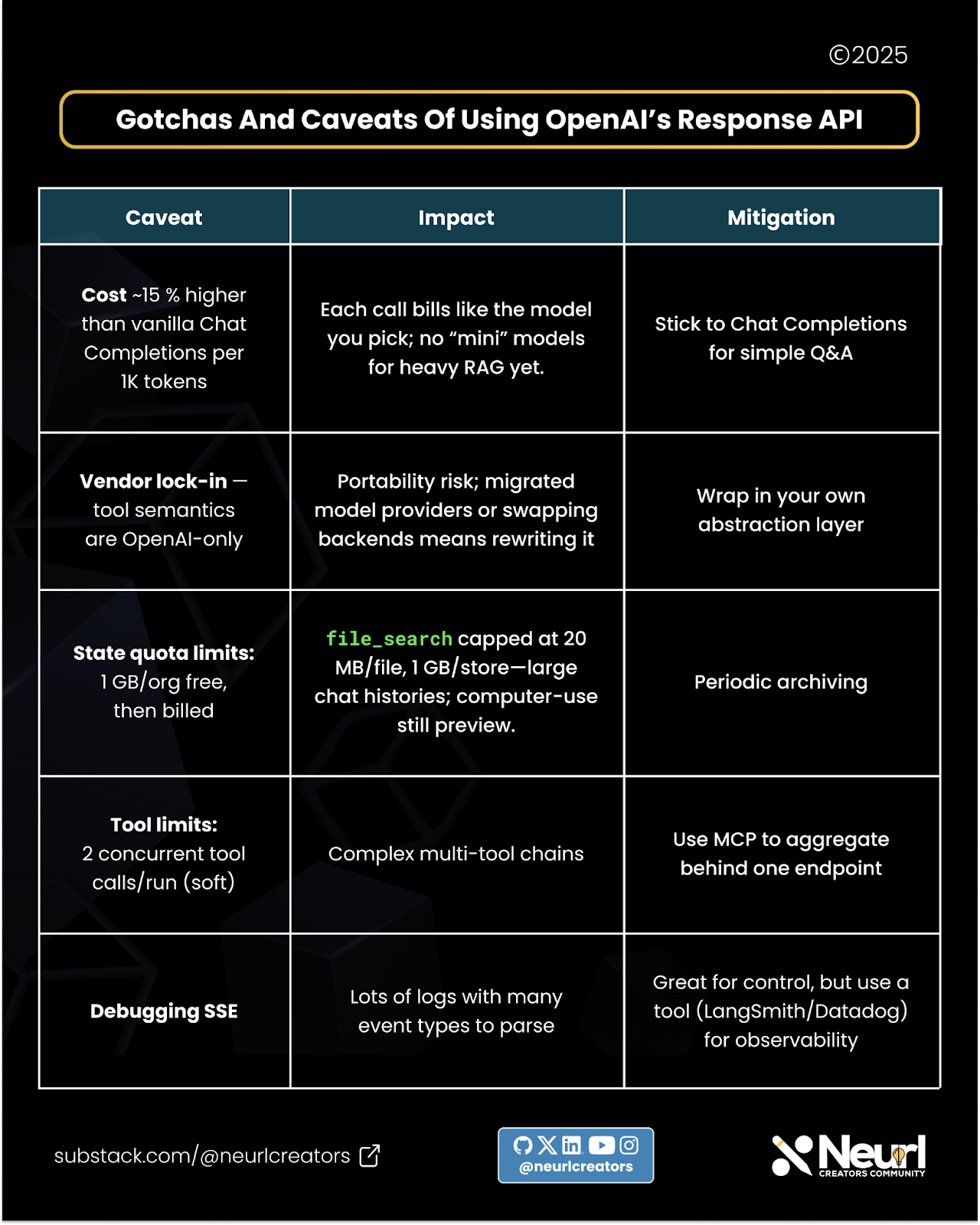

📉 Gotchas and Caveats of Using OpenAI’s Responses API

Vendor lock-in: Tool calls, state store, and event schema are OpenAI-specific (as of this writing). Migrating to Anthropic or Gemini would mean rewriting the backend.

Tool quotas and pricing: web search is capped at 20 calls per minute and costs $25 to $50 per 1,000 calls; file search storage is billed once it exceeds 1 GB; and Code Interpreter charges $0.03 per session. Budget before you switch.

Model hopping quirks: Mixing gpt-4o and gpt-4o-mini inside the same state chain, or changing models mid-thread in general, could cause you to lose stored context.

Model choice: Built-in tool support varies by model. The GPT-4o family (4o, 4o-mini) supports the tools used in this article, while older models may not, so check a model's tool support before relying on it.

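No custom hosted tools yet: Beyond the predefined built-in types and MCP servers, your own code is reachable only through standard function calling (type "function"), where your application executes the call and sends back the result; you can't register arbitrary functions for OpenAI to run (for now).

And of course, the Responses API is still less than 3 months old. Expect breaking-change warnings, especially around event names.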
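The pricing line above is easy to turn into a back-of-the-envelope budget. Below is a minimal sketch that uses only the figures quoted in this article, which may have changed since. The storage price per GB is left as a parameter because it isn't quoted here, and all names are hypothetical.

```python
WEB_SEARCH_PER_1K = (25.0, 50.0)     # USD per 1,000 web search calls (low, high), as quoted above
CODE_INTERPRETER_PER_SESSION = 0.03  # USD per session, as quoted above
FREE_STORAGE_GB = 1.0                # file search storage beyond this is billed
WEB_SEARCH_RPM = 20                  # calls per minute, as quoted above


def estimate_tool_cost(web_calls: int, ci_sessions: int,
                       storage_gb: float, storage_rate_per_gb: float) -> tuple[float, float]:
    """Return (low, high) tool cost in USD for one billing period.

    storage_rate_per_gb is your own assumption for the billed storage price.
    """
    billable_gb = max(0.0, storage_gb - FREE_STORAGE_GB)
    fixed = ci_sessions * CODE_INTERPRETER_PER_SESSION + billable_gb * storage_rate_per_gb
    low = web_calls / 1000 * WEB_SEARCH_PER_1K[0] + fixed
    high = web_calls / 1000 * WEB_SEARCH_PER_1K[1] + fixed
    return low, high


def min_minutes_for(web_calls: int, rpm_limit: int = WEB_SEARCH_RPM) -> float:
    """Minimum wall-clock minutes needed to issue web_calls under a per-minute cap."""
    return web_calls / rpm_limit
```

For example, 10,000 web search calls and 500 Code Interpreter sessions a month come to $265 to $515 in tool fees before storage and tokens. At 20 calls per minute, those searches need at least 500 minutes of call time.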

💭 Community Pulse:

Here’s a perspective shared by Rahul Parundekar in MLOps.community:

The caveat with any lib is that you need to wait for their code to catch up to a new model.

E.g. a new version of GPT launches, and they don't have working code for it yet.

A new interface launches (e.g. the Responses API), and it takes the contributors' effort to adapt.

A new capability comes in that other models don't support; retrofitting is what these libs do, e.g. the "developer" role in ChatGPT or a new image generation interface.

If you have an LLM vendor in production, use their libs. If you are iterating, use one of these.

🏛️ Real-World Use Case: Contract Review Copilot

A lawyer uploads a 200-page PDF.

The front end sends the client’s questions through the Responses API with file_search enabled.

The model streams back clause-level citations as semantic events, and the UI highlights the cited paragraphs live.

If a clause references external law, the model triggers web_search_preview to check for the latest amendments.

Outcome: 8× faster first-pass review, no local vector database to maintain.
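The contract-review flow above can be sketched as follows. The sketch assumes the openai Python SDK's vector store helpers (client.vector_stores.create and client.vector_stores.files.upload_and_poll) and the file_citation / url_citation annotation types on output text. The function names and the store name are hypothetical. For brevity the sketch makes non-streaming calls, whereas the flow described above streams its citations.

```python
def review_tools(vector_store_id: str) -> list[dict]:
    """Tools for the review flow: the uploaded contract plus live web search."""
    return [
        {"type": "file_search", "vector_store_ids": [vector_store_id]},
        {"type": "web_search_preview"},  # lets the model check amendments to cited law
    ]


def index_contract(client, pdf_path: str) -> str:
    """Upload one PDF into a new vector store and return the store's ID."""
    store = client.vector_stores.create(name="contract-review")  # name is arbitrary
    with open(pdf_path, "rb") as f:
        # Blocks until the file has been chunked and embedded.
        client.vector_stores.files.upload_and_poll(vector_store_id=store.id, file=f)
    return store.id


def collect_citations(response) -> list[str]:
    """List cited sources: file names for file_search hits, URLs for web search."""
    cited = []
    for item in response.output:
        if item.type != "message":
            continue  # skip tool-call items
        for part in item.content:
            for ann in getattr(part, "annotations", None) or []:
                if ann.type == "file_citation":
                    cited.append(getattr(ann, "filename", None) or ann.file_id)
                elif ann.type == "url_citation":
                    cited.append(ann.url)
    return cited


def ask_about_contract(client, vector_store_id: str, question: str, model: str = "gpt-4o"):
    """Ask one question against the indexed contract; returns (answer, citations)."""
    resp = client.responses.create(
        model=model,
        input=question,
        tools=review_tools(vector_store_id),
    )
    return resp.output_text, collect_citations(resp)
```

The vector store lives on OpenAI's side, which is what "no local vector database to maintain" refers to. The front end would call `index_contract` once per upload and `ask_about_contract` per question.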


🔍 How Does OpenAI’s Responses API Compare to the Previous Chat Completions API?

🧑‍⚖️ Final Verdict: Yes for Agent Builders, Maybe for Prompters

⭐ Rating: ⭐⭐⭐☆☆ (3.5/5)

OpenAI’s Responses API is the fastest on-ramp we’ve seen to production-grade, tool-using agents. It obliterates a ton of boilerplate and observability debt, but in exchange you marry the OpenAI ecosystem more tightly.

If you’re fine living in the OpenAI ecosystem, the DX and built-ins pay for themselves quickly. But if multi-vendor flexibility or ultra-cheap endpoints are must-haves, stay on Chat Completions (or OSS) for now.

✅ Ship it if…

You’re prototyping an agent that needs live web, RAG, or remote tools in < 100 lines of code.

You want OpenAI-managed conversation state to simplify front-end code.

You’re already all-in on GPT-4o family models.

You need structured streaming events for richer interactions.

🚧 Hold off if…

Your org needs cloud-agnostic infra: Responses-only features won’t port to Anthropic or Gemini.

You spend more than $50k a month on raw tokens, and every percentage point of cost matters.

Your traffic is 100% simple Q&A with no need for custom on-prem tool calls (awaiting custom tool GA).

You prefer simpler, stateless APIs for basic applications.

🧠 Learn More

Official Release Post – OpenAI: https://openai.com/index/new-tools-for-building-agents/

Docs: Responses vs Chat: https://platform.openai.com/docs/guides/responses-vs-chat-completions

New tools blog: https://openai.com/index/new-tools-and-features-in-the-responses-api/

🧾 Our Substack Deep Dive: Responses API vs. Chat Completions:

OpenAI Response API vs Chat Completions: Which Should You Use for Your Next Build?

You're gearing up to build your next AI-powered application and have chosen OpenAI as your language model provider. You've likely worked with the powerful GPT models before, and now you're back in the docs, setting up your stack. But right away, you're faced with a fundamental question: Should you stick with the tried and true